When it comes to artificial intelligence (AI) and elections, there has been no shortage of doomsayers. Technologists warned it would hijack democracy. A think tank predicted a tidal wave of misinformation that would leave voters "completely at a loss." One congresswoman called it "the most significant threat to democracy that the world has seen in a generation."

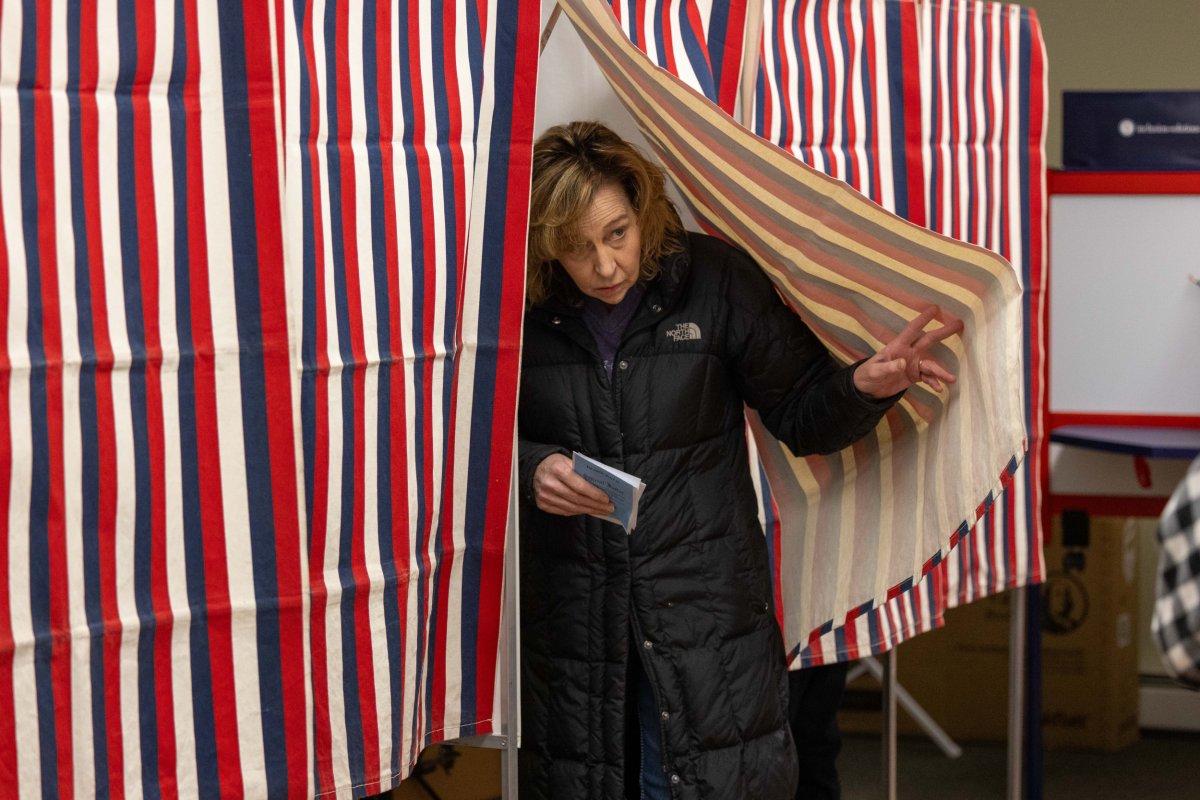

But the presidential primaries have come and gone, tens of millions of voters have cast their ballots, and we can now look back on the first leg of the 2024 presidential election and say with confidence: it went fine.

AI's biggest appearance in the 2024 primaries was a robocall in New Hampshire that used a deepfake of President Joe Biden's voice to urge voters not to turn out on primary day. The call was quickly exposed for what it was, and its perpetrator is now facing a federal lawsuit, because spreading election disinformation is already illegal, with or without AI.

Meanwhile, the New Hampshire primary saw record turnout, including Democratic turnout that clobbered turnout for former President Barack Obama in the 2012 primary—the last time Democrats had an incumbent president up for election. And Biden didn't even campaign in the state.

The largest category of AI use in this year's election has been public officials using AI to warn about the dangers of AI. In Georgia, a state legislator made a deepfake of another lawmaker to prove that deepfakes are bad. In Arizona, election policymakers have similarly used deepfakes to train staff and warn the public.

This isn't to say that AI hasn't been used nefariously anywhere in the world or that AI misinformation won't make another appearance in this year's presidential election. Abroad, we've seen AI misinformation pop up at election time in countries from Moldova to Slovakia to Bangladesh.

But AI misinformation has fallen substantially short of the catastrophic pronouncements that AI doomers made in the run-up to this year's elections. So why did the projected AIpocalypse never arrive for this year's primaries?

For one, U.S. democracy isn't new to misinformation. Misleading campaign attacks are an American tradition that predates AI and even Photoshop. Back in 1800, rumors abounded that if Thomas Jefferson won the presidency, he would wage a war on religion and confiscate family Bibles. More recently, Fox News has aired lies about everything from election results to climate change to bogus FBI informants, all without the help of AI.

The result of all that misinformation is skepticism among the public and an awareness of misinformation coming even from trusted news sources. Seven in 10 Americans are aware that they've likely been exposed to misinformation, while 67 percent say they've seen their own news sources report facts meant to favor one side. While the distrust bred by decades of misinformation isn't healthy for our public discourse, it does provide a natural armor against AI misinformation.

The other reason AI misinformation has failed to be the election killer some policymakers predicted is the effort online platforms have made to downrank and remove misinformation, and in some cases political content altogether. In 2021, Meta pursued a broad takedown of misinformation globally, an effort that research found decreased the consumption and production of hate content on Facebook. And since the rise of AI, digital platforms from YouTube to X/Twitter to Microsoft have all launched crackdowns targeting deepfakes.

It's worth recognizing that federal laws already prohibit spreading election misinformation. Section 241 of Title 18 makes it unlawful to "conspire to injure, oppress, threaten, or intimidate any person" exercising a constitutional right, including the right to vote. Already, the DOJ has successfully used that law to prosecute the online spread of election misinformation, convicting an influencer who attempted to trick people into voting by text message in 2016.

While the infamous New Hampshire robocall gained national attention for its use of AI, law enforcement has a number of options for prosecuting the perpetrator of the call under existing law.

It's unlikely that making additional laws banning misinformation specifically created by AI will dissuade bad actors not already dissuaded by federal voting rights laws. Instead, policymakers should focus on enforcement and funding for the laws already on the books.

Looking ahead to the general election, there will continue to be warnings of an imminent AIpocalypse. This isn't to say that state election officials should be unprepared for foreign interference attempts—they should. But calls to shut down access to an empowering tech innovation over an imagined election catastrophe should be taken—just as Americans take their political news—with a healthy dose of skepticism.

Adam Kovacevich is founder and CEO of the center-left tech industry coalition Chamber of Progress. Adam has worked at the intersection of tech and politics for 20 years, leading public policy at Google and Lime and serving as a Democratic Hill aide.

The views expressed in this article are the writer's own.

Uncommon Knowledge

Newsweek is committed to challenging conventional wisdom and finding connections in the search for common ground.