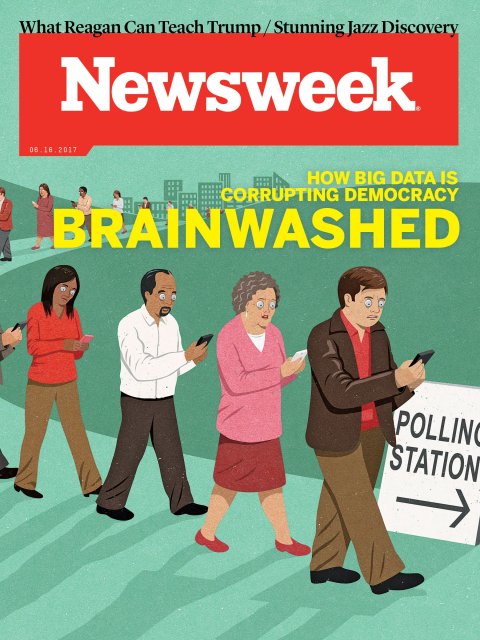

The opening chords of Creedence Clearwater Revival's "Bad Moon Rising" rocked a hotel ballroom in New York City as a nattily dressed British man strode onstage several weeks before last fall's U.S. election.

I see the bad moon rising,

I see trouble on the way

The speaker, Alexander Nix, an Eton man, was very much among his own kind—global elites with names like Buffett, Soros, Brokaw, Pickens, Petraeus and Blair. Trouble was indeed on the way for some of the attendees at the annual summit of policymakers and philanthropists whose world order was about to be wrecked by the American election. But for Nix, chief executive officer of a company working for the Trump campaign, that mayhem was a very good thing.

He didn't mention it that day, but his company, Cambridge Analytica, had been selling its services to the Trump campaign, which was building a massive database of information on Americans. The company's capabilities included, among other things, "psychographic profiling" of the electorate. And while Trump's win was in no way assured on that afternoon, Nix was there to give a cocky sales pitch for his cool new product.

"It's my privilege to speak to you today about the power of Big Data and psychographics in the electoral process," he began. As he clicked through slides, he explained how Cambridge Analytica can appeal directly to people's emotions, bypassing cognitive roadblocks, thanks to the oceans of data it can access on every man and woman in the country.

After describing Big Data, Nix talked about how Cambridge was mining it for political purposes, to identify "mean personality" and then segment personality types into yet more specific subgroups, using other variables, to create ever smaller groups susceptible to precisely targeted messages.

To illustrate, he walked the audience through what he called "a real-life example" taken from the company's data on the American electorate, starting with a large anonymous group with a general set of personality types and moving down to the most specific—one man, it turned out, who was easily identifiable.

Nix started with a group of 45,000 likely Republican Iowa caucusgoers who needed a little push—what he calls a "persuasion message"—to get out and vote for Ted Cruz (who used Cambridge Analytica early in the 2016 primaries). That group's specifics had been fished out of the data stream by an algorithm sifting the thousands of digital data points of their lives. Nix was focusing on a personality subset the company's algorithms determined to be "very low in neuroticism, quite low in openness and slightly conscientious."

Click. A screen of graphs and pie charts.

"But we can segment further. We can look at what issue they care about. Gun rights I've selected. That narrows the field slightly more."

Click. Another screen of graphs and pie charts, but with some circled specifics.

"And now we know we need a message on gun rights. It needs to be a persuasion message, and it needs to be nuanced according to the certain personality type we are interested in."

Click. Another screen, the state of Iowa dotted with tiny reds and blues—individual voters.

"If we wanted to drill down further, we could resolve the data to an individual level, where we have somewhere close to 4- or 5,000 data points on every adult in the United States."

Click. Another screenshot with a single circled name—Jeffrey Jay Ruest, gender: male, and his GPS coordinates.
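The drill-down Nix walked through, from a personality segment to an issue to individuals, amounts to a chain of filters over a table of voter records. A minimal sketch of that idea follows. The `Voter` fields, the 0-to-1 score scale and the numeric thresholds are all invented for illustration and are not taken from Cambridge Analytica's actual data or methods.

```python
from dataclasses import dataclass


@dataclass
class Voter:
    # Hypothetical record layout; scores are assumed to be on a 0..1 scale.
    voter_id: str
    neuroticism: float
    openness: float
    conscientiousness: float
    top_issue: str


def matches_personality(v: Voter) -> bool:
    # Assumed cutoffs standing in for "very low in neuroticism,
    # quite low in openness and slightly conscientious".
    return (
        v.neuroticism < 0.2
        and v.openness < 0.35
        and 0.5 < v.conscientiousness < 0.7
    )


def drill_down(voters, issue):
    # Step 1: keep only the personality subset.
    by_personality = [v for v in voters if matches_personality(v)]
    # Step 2: narrow that subset to people who care about one issue.
    by_issue = [v for v in by_personality if v.top_issue == issue]
    return by_personality, by_issue


# Made-up records, not real people.
voters = [
    Voter("v1", 0.10, 0.30, 0.60, "gun rights"),
    Voter("v2", 0.10, 0.30, 0.60, "taxes"),
    Voter("v3", 0.80, 0.30, 0.60, "gun rights"),
    Voter("v4", 0.15, 0.20, 0.55, "gun rights"),
]

segment, targets = drill_down(voters, "gun rights")
print([v.voter_id for v in segment])
print([v.voter_id for v in targets])
```

Each filter shrinks the group, which is why the final step can land on a handful of people or a single named individual.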

The American voter whose psychological tendencies Nix had just paraded before global elites like a zoo animal was easy to find. Cambridge researchers would have known much more about him than his address. They probably had access to his Facebook likes—heavy metal band Iron Maiden, a news site called eHot Rods and Guns, and membership in Facebook groups called My Daily Carry Gun and Mopar Drag Racing.

"Likes" like those are the sine qua non of the psychographic profile.

And like every other one of the hundreds of millions of Americans now caught in Cambridge Analytica's slicing and dicing machine, Ruest was never asked if he wanted a large swath of his most personal data scrutinized so that he might receive a message tailored just for him from Trump.

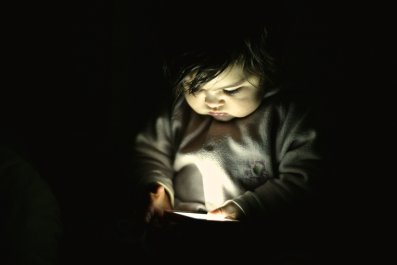

Big Data, artificial intelligence and algorithms designed and manipulated by strategists like the folks at Cambridge have turned our world into a Panopticon, the 19th-century circular prison designed so that guards, without moving, could observe every inmate every minute of every day. Our 21st-century watchers are not just trying to sell us vacations in Tuscany because they know we have Googled Italy or bought books about Florence on Amazon. They exploit decades of behavioral science research into the flawed, often irrational ways human beings make decisions to subtly "nudge" us—without our noticing it—toward one candidate. Of all the horror-movie twists and scary-monster turns in real life these days, from murderous religious warriors to the Antarctic melting to the rise of little Hitlers all over the world, one of the creepiest is the certainty that machines know more about us than we do and that they could, in the near future, deliver the first AI president—if they haven't already.

Unknow Thyself

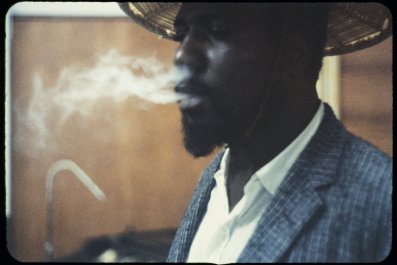

When CIA officer Frank Wisner created Operation Mockingbird in 1948—the CIA's first media manipulation effort—he boasted that his network was "a mighty Wurlitzer" capable of manipulating facts and public opinion at home and around the world. The power and stress of managing that virtual machine soon drove Wisner bug-eyed mad, and he killed himself.

But far mightier versions of that propaganda Wurlitzer exist today, powered by a gusher of raw, online personal information that is fed into machines and then analyzed by algorithms that personalize political messages for ever-smaller groups of like-minded people. Vast and growing databases compiled for commerce and policing are also for sale to politicians and their strategists, who can now know more about you than your spouse or parents. The KGB and the Stasi, limited to informants, phone tapping and peepholes, could only have dreamed of such snooping superpowers.

Anyone can try it out: Cambridge University, where the Cambridge Analytica research method was conceived, is not commercially connected to the company, but the school's website allows you to see how Facebook-powered online psychography works. At ApplyMagicSauce.com, the algorithm (after obtaining Facebook user consent) does what Cambridge Analytica did before the last U.S. election cycle when it made tens of millions of "friends" by first employing low-wage tech-workers to hand over their Facebook profiles: It spiders through Facebook posts, friends and likes, and, within a matter of seconds, spits out a personality profile, including the so-called OCEAN psychological tendencies test score (openness, conscientiousness, extraversion, agreeableness and neuroticism). (This reporter's profile was eerily accurate: It knew I was slightly more "liberal and artistic" than "conservative and traditional," that I have "healthy skepticism," and am "calm and emotionally stable." It got my age wrong by a decade or so, and while I'd like to think that's because I'm preternaturally youthful, it could also be because I didn't put my birth year in Facebook.)

Cambridge Analytica, with its mass psychographic profiling, is in the same cartoonishly dark arts–y genre as several other Trump campaign operators, including state-smashing nationalist Steve Bannon, now a top White House adviser, and political strategist Roger Stone, a longtime Republican black-ops guy. Bannon sat on the board of Cambridge, and his patron, conservative billionaire Robert Mercer, whose name is rarely published without the adjective "shadowy" nearby, reportedly owns 90 percent of it.

But Cambridge was just one cog in the Trump campaign's large data mining machine. Facebook was even more useful for Trump, with its online behavioral data on nearly 2 billion people around the world, each of whom is precisely accessible to strategists and marketers who can afford to pay for the peek. Team Trump created a 220 million–person database, nicknamed Project Alamo, using voter registration records, gun ownership records, credit card purchase histories and the monolithic data vaults of Experian PLC, Datalogix, Epsilon and Acxiom Corporation. First son-in-law Jared Kushner saw the power of Facebook long before Trump was named the Republican candidate for president. By the end of the 2016 campaign, the social media giant was so key to Trump's efforts that the campaign's data team designated a Facebook employee named James Barnes the digital campaign's MVP.

They were hardly the first national campaign to do something like that: The Democratic National Committee has used Catalist, a 240 million–strong storehouse of voter data, containing hundreds of points of data per person, pulled from commercial and public records.

But that was back in ancient times, before Facebook had Lookalike audiences, and AI and algorithms were able to parse the electorate into 25-person interest groups. And by 2020, you can bet the digital advances of 2016 will look like the horse and buggy of political strategy.

Dr. Spectre's Echo Chambers

Among the many services Facebook offers advertisers is its Lookalike Audiences program. An advertiser (or a political campaign manager) can come to Facebook with a small group of known customers or supporters, and ask Facebook to expand it. Using its access to billions of posts and pictures, likes and contacts, Facebook can create groups of people who are "like" that initial group, and then target advertising made specifically to influence it.
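One way to picture how a small seed group might be expanded into a "lookalike" group is a similarity ranking: represent each person as a vector of interests, average the seed group into a single profile, and rank everyone else by how closely they match it. The sketch below does that with cosine similarity. It is an illustration only; Facebook has not published how Lookalike Audiences works, and the names, vectors and the `lookalikes` function are hypothetical.

```python
import math


def cosine(a, b):
    # Cosine similarity between two equal-length numeric vectors.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def centroid(vectors):
    # Component-wise average of the seed group's vectors.
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def lookalikes(seed, population, top_k):
    # Rank everyone outside the seed group by similarity to the seed average.
    center = centroid(list(seed.values()))
    scored = [
        (cosine(vector, center), name)
        for name, vector in population.items()
        if name not in seed
    ]
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]


# Invented data: each position marks interest (1) or no interest (0)
# in one of four made-up pages.
seed = {"ann": [1, 1, 0, 0], "bob": [1, 0, 1, 0]}
population = {
    "ann": [1, 1, 0, 0],
    "bob": [1, 0, 1, 0],
    "cat": [1, 1, 1, 0],
    "dan": [0, 0, 0, 1],
    "eve": [1, 0, 0, 0],
    "fay": [0, 1, 0, 1],
}

print(lookalikes(seed, population, top_k=2))
```

A production system would presumably work with far richer signals than four binary interests, but the principle of growing an audience outward from known supporters is the same.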

The marriage of psychographic microtargeting and Facebook's Lookalike program was the next logical step in a tactic that goes back at least to 2004, when Karl Rove initiated electoral microtargeting by doing things like identifying Amish people in Ohio, then getting them so riled up about gay marriage that they raced their buggies to the polls to vote for the first time ever.

Since then, the ability of machines and algorithms to analyze and sort the American electorate has increased dramatically. Now, with the help of Big Data, strategists can, with a click of a mouse or keypad, apply for and get your relative OCEAN score. Psychographic analyses don't even require Facebook; computers can sort people psychologically using thousands of commercially available data points and then run their profiles against people who have actually taken the tests.
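Running profiles "against people who have actually taken the tests" can be sketched as a nearest-neighbor estimate: find the test takers whose data most resembles the target's and average their scores. The example below uses overlap between sets of interests as the similarity measure. The interests, the scores and the `predict_trait` helper are invented for illustration; the article does not say which statistical method the strategists actually use.

```python
def overlap(a, b):
    # Jaccard similarity: shared items divided by all items in either set.
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def predict_trait(interests, labeled, k=2):
    # Average the known scores of the k most similar test takers.
    ranked = sorted(
        labeled,
        key=lambda record: overlap(interests, record[0]),
        reverse=True,
    )
    nearest = ranked[:k]
    return sum(score for _, score in nearest) / len(nearest)


# Hypothetical people who took a personality test, with an
# openness score on an assumed 0..1 scale.
labeled = [
    ({"hot rods", "drag racing", "heavy metal"}, 0.30),
    ({"poetry", "museums", "jazz"}, 0.90),
    ({"drag racing", "hunting"}, 0.25),
    ({"museums", "travel"}, 0.80),
]

# Someone who never took the test but left similar data points behind.
estimate = predict_trait({"drag racing", "heavy metal", "hunting"}, labeled)
print(round(estimate, 3))
```

The estimate is only as good as the pool of labeled test takers and the similarity measure, which is one reason the accuracy of commercial psychographics is disputed.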

When Barack Obama ran for president in 2008, his campaign was credited with mastering social media and data mining. Four years later, in 2012, the Obama campaign tested new possibilities when it ranked the "persuadability" of specific groups and conducted experiments combining phone calls and demographic analysis of how well messages worked on them.

By 2012, there had been huge advances in what Big Data, social media and AI could do together. That year, Facebook conducted a happy-sad emotional manipulation experiment, splitting a million people into two groups and manipulating the posts so that one group received happy updates from friends and another received sad ones. They then ran the effects through algorithms and proved—surprise—that they were able to affect people's moods. (Facebook, which has the greatest storehouse of personal behavior data ever amassed, is still conducting behavioral research, mostly, again, in the service of advertising and making money. In early May, leaked documents from Facebook's Australia office showed Facebook telling advertisers how it could identify emotional states, including "insecure teens," to better target products.)

By 2013, scientists at Cambridge University were experimenting with how Facebook could be used for psychographic profiling—a methodology that eventually went commercial with Cambridge Analytica. One of the scientists involved in commercializing the research, American researcher Aleksandr Kogan, eventually gained access to 30 million Facebook profiles for what became Cambridge Analytica. No longer affiliated with the company, he has moved to California, legally changed his name to Aleksandr Spectre (which had nothing to do with James Bond, but was about finding a "non-patriarchal" name to share with his new wife) and set up a Delaware corporation selling data from his online questionnaires and surveys—another, slightly more transparent method to Hoover up personal information online.

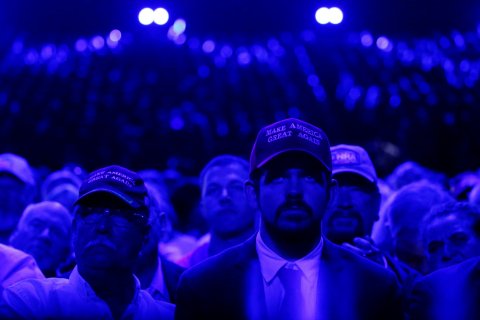

The 2016 election, sometimes now called the Facebook election, saw entirely new capabilities applied by Facebook, beyond Cambridge Analytica's experiments. Trump might well have been elected before social media existed, but advances in data collection, and the relative lawlessness regarding privacy in the United States (more on that later), enabled the most aggressive microtargeting in political history—pulling "low-information" new voters into the body politic and expanding the boundaries of racist, anti-Semitic and misogynistic political speech.

Christoph Bornschein is a German IT consultant who advises German Chancellor Angela Merkel on online privacy and other internet issues. He says the difference between the Obama election strategies and Trump's is in the algorithms and today's advanced AI. The same tools that enable marketers to identify and create groups of "statistical twins," or like-minded people, and then to target ads to sell them shoes, trips and washing machines also enable political strategists to create "echo chambers" filled with slogans and stories that people want to hear, aka fake news.

The segmentation by Facebook's advertising tools of very small, like-minded groups of people who might not have been grouped together helped break the so-called Overton window—the outer limit of acceptable speech in American public discourse. For example, voters known privately—on Facebook—to favor racist or anti-Semitic ideas can also be grouped together and targeted with so-called dark ads written specifically for small groups and not widely shared. In 2016, racist sentiment, white supremacy, resentment of refugees, anti-Semitism and virulent misogyny flooded social media and then leaked out into campus posters and public rallies.

Psychographic algorithms allow strategists to target not just angry racists but also the most intellectually gullible individuals, people who make decisions emotionally rather than cognitively. For Trump, such voters were the equivalent of diamonds in a dark mine. Cambridge apparently helped with that too. A few weeks before the election, in a Sky News report on the company, an employee was actually shown on camera poring over a paper on "The Need for Cognition Scale," which, like the OCEAN test, can be applied to personal data, and which measures the relative importance of thinking versus feeling in an individual's decision-making.

The Trump campaign used Facebook's targeted advertising to identify ever smaller audiences—"fireplaces" in IT talk—receptive to very precisely targeted messages. This targeting is increasingly based on behavioral science that has found people resist information that contradicts their viewpoints but are more susceptible when the information comes from familiar or like-minded people.

Finding and provoking people who hate immigrants, women, blacks and Jews is not hard to do with Facebook's various tools, and Facebook, while aware of the danger, has, so far, not created barriers to prevent that. It has, however, acknowledged the potential. "We have had to expand our security focus from traditional abusive behavior, such as account hacking, malware, spam and financial scams, to include more subtle and insidious forms of misuse, including attempts to manipulate civic discourse and deceive people," Facebook stated in an April report.

On any given day, Team Trump was placing up to 70,000 ad variants, and around the third debate with Hillary Clinton, it pumped out 175,000 ad variants. Trump's digital advertising chief, Gary Coby, has said the ad variants were not precisely targeted to speak to, say, "Bob Smith in Ohio," but were aimed at increasing donations from disparate small segments of voters. He compared the process to "high-frequency trading" and says Trump used Facebook "like no one else in politics has ever done."

He denied that the Trump camp ever used Cambridge Analytica's psychographics—although clearly, based on the individual Nix outed in his New York City speech, Cambridge had applied the special sauce to Trump voters.

Coby also denied that the campaign was behind the barrage of anti-Clinton ads and propaganda, made in Eastern Europe and in the U.S., precisely targeted to the disaffected people identified by Facebook's tools to be like known Trump voters, in an attempt to suppress the vote among minorities and women. Research suggests that suppression ads and fake news were more effective in determining the outcome of the election than Trump's push ads.

Facebook ad targeting by race and gender is not new and is legal, although there have been scandals. Last fall, author and journalist Julia Angwin, who wrote Dragnet Nation: A Quest for Privacy, Security, and Freedom in a World of Relentless Surveillance, revealed that housing advertisers were using Facebook's "ethnic affinity" marketing tool to exclude blacks from ads. Facebook promised to build tools to prevent it, but the social media giant has said nothing about using the tool on racially targeted political messages.

A spokesman for Facebook refused to speak on the record about the various allegations and said founder and CEO Mark Zuckerberg would not comment for this article. "Misleading people or misusing their information is a direct violation of our policies and we will take swift action against companies that do, including banning those companies from Facebook and requiring them to destroy all improperly collected data," the spokesman wrote in an emailed message. Facebook's definition of misusing data, the spokesman said, is laid out in its terms, which are lengthy and broadly divided into issues of safety and identity. Nothing in the terms appears to explicitly bar the kind of psychographic analysis Cambridge was doing.

Zuckerberg's last public pronouncement on the Facebook election was in March, when he said, at North Carolina A&T University: "There have been some accusations that say that we actually want this kind of content on our service because it's more content and people click on it, but that's crap. No one in our community wants fake information." He has not spoken publicly about psychographic microtargeting, but as criticism mounted after the election, Facebook has hired 3,000 people to monitor reports of hate speech.

Democratic campaign strategists who spoke with Newsweek acknowledge that Trump's digital strategy was effective, but they don't think it won him the election. "Ultimately, in my opinion, Trump's overall strategy was less sophisticated, not more" than prior years, says Marie Danzig, deputy director for Obama's digital operation in 2012, now with Blue State Digital, a political strategy firm that works for progressive causes. "He focused on large-scale, mass fear-mongering. Social media has become a powerful political platform. That didn't exist two [election] cycles ago, and you can't ignore that. Social media is a perfect vehicle for outlandish statements to rally the base or for disseminating fake news. When you use available psychographic or behavioral data and use it to mislead or make people fear, that is a dangerous game with dangerous results."

Danzig and other Democratic strategists say Facebook's microtargeting abilities, behavioral science and the stores of data held by other social media platforms like Twitter and Snapchat are tools that won't go back inside Pandora's box. They, of course, insist they won't be looking for low-cognition voters high in neuroticism who are susceptible to fear-based messages. But Big Data plus behavioral science plus Facebook plus microtargeting is the political formula to beat. They will use it, and they won't talk about how they will refine and improve it.

Coby predicted that by 2020, more platforms like Google and Facebook will likely come online, and the creation of tens or hundreds of thousands of ad variants will become more programmatic and mechanized. Finally, he predicted that "messenger bots" will become more prevalent and more targeted, so that voters in, say, Ohio, could get answers from a Trump bot about questions specific to them and their communities.

There's a reason Zuckerberg, Cambridge and even Democratic consultants don't want to delve too deeply into the implications of what they are up to, says Eli Pariser, author of The Filter Bubble: What the Internet Is Hiding From You. "There are several dangers here," he says. "One is that when we stop hearing what political arguments are being made to whom, we stop being able to have a dialogue at all—and we're quite close to that microtargeted world already. The other is that it's not hard to imagine a world where we move past making specific intentional arguments to specific psychographic subgroups—where political campaigns just apply a million different machine-generated messages to a million different statistically significant clusters of people and amplify the ones that measurably increase candidate support, without an understanding of what is working or even what is being argued."

Whack-a-Mole Privacy

"People don't understand data," says Travis Jarae, a former Google executive who specializes in securing people's online identities, mainly to protect large companies from hackers and thieves. "People don't understand what bread crumbs they leave around the internet. And our representatives in government don't understand how analytics work." Jarae founded a consulting firm that advises corporations on online identity and security, and he finds the ignorance extends even to officials at financial firms, where trillions of dollars are at stake. "If they don't understand, do you think governments and regular citizens do?"

Big Data technology has so far outpaced legal and regulatory frameworks that discussions about the ethics of its use for political purposes are still rare. No senior member of Congress or administration official in Washington has placed a very high priority on asking what psychographic data mining means for privacy, nor about the ethics of political messaging based on evading cognition or rational thinking, nor about the AI role in mainstreaming racist and other previously verboten speech.

Activists in Europe are asking those questions. Swiss mathematician and data protection advocate Paul-Olivier Dehaye, founder of PersonalData.IO, which helps people get access to data about them, has initiated arbitration with Facebook 10 times for information it collects on him and others. He has written extensively on both Facebook and Cambridge, including instructions on how to apply for the data they collect. "It's a whack-a-mole thing," he says. "It would be wrong to think these platforms are separate. There are companies whose role is to link you across all platforms and companies whose product is exactly to link those things together."

Even industry insiders concede that the implications of data-driven psychographics are creepy. "The possibilities are terrifying," said Greg Jones, a vice president at Equifax, one of the biggest data collectors, who participated in a recent panel discussion in Washington, D.C., on regulating Big Data. "When you look at what [Cambridge] did with the microtargeting, that's kind of a marketer's dream, right? Having the kind of intimacy with your customer that allows you to give them the perfect offer at the perfect time. But their usage of it? Legal, yes. Is it ethical? I don't know. Should some regulation be applied for political purposes, where you can't do microsegmentation and offer people the best offer based on that, whether it be a credit card or the best political party? I think some of these things have to mature, and I think people will decide."

But when? There have been no postelection changes in American privacy law and policy. On the contrary, Trump moved decisively in April toward less privacy and even more commercial data, repealing Obama-era privacy rules that would have required broadband and wireless companies to get permission before sharing sensitive information. Now, companies like Verizon and AT&T can start to monetize data about online activity inside people's homes and on their phones.

After the election, Cambridge Analytica's parent company, defense contractor Strategic Communications Laboratories (SCL), quickly set up an office blocks from the White House and finalized a $500,000 contract from the U.S. State Department to help assess the impact of foreign propaganda, according to The Washington Post. But while the money rolls in, a small but persistent core of outrage has forced the formerly self-promoting Nix and company to turn shy and self-effacing. Publicist Nick Fievet tells Newsweek Cambridge Analytica doesn't use data from Facebook and that when it mines information from Facebook, it is via quizzes "with the express consent of each person who wishes to participate." He also says Cambridge did not have the time to apply psychographics to its Trump work.

After months of investigations and increasingly critical articles in the British press (especially by The Guardian's Carole Cadwalladr, who has called Cambridge Analytica's work the framework for an authoritarian surveillance state, and whose reporting Cambridge has since legally challenged), the British Information Commissioner's Office (ICO), an independent agency that monitors privacy rights and adherence to the U.K.'s strict laws, announced May 17 that it is looking into Cambridge and SCL for their work in the Brexit vote and other elections.

Other lawyers in London are trying to mount a class-action suit against Cambridge and SCL. Because of the scale of the data collection involved here, astronomical damages could be assessed.

In the U.S., congressional investigations are reportedly looking into whether Cambridge Analytica had ties to the right-wing Eastern European web bots that flooded the internet with negative and sometimes false Clinton stories whenever Trump's poll numbers sagged during the campaign.

American venture capitalists and entrepreneurs are hustling to build websites and apps that can stem the flow of fake news. A Knight Prototype Fund on Misinformation and a small group of venture capitalists are putting up seed money for entrepreneurs with ideas about how to do that. Hundreds of developers attended the first Misinfocon at MIT earlier this year, and more such conferences are planned. Facebook and Google have been scrambling since November devising ways to filter the rivers of fake news.

'They're Not Bullshitting'

After the election, as the scale of the microtargeting and fake news operation became clear, Cambridge and Facebook went on defense. One of the founders of Cambridge even denies to Newsweek that its method works, claiming that psychographics have an accuracy rate of around 1 percent. Nix, the source says, is selling snake oil.

Before journalists started poking around, before privacy activists in Europe started preparing to file suit, before the British ICO launched its investigation and before a Senate committee started looking into Cambridge Analytica's possible connections to Russian activities on behalf of Trump during the election, Cambridge was openly boasting about how its psychographic capacities were being applied to the American presidential race. SCL still advertises its work influencing elections in developing nations and even mentions on its website its links to U.S. defense contractors like Sandia National Laboratories, where computer scientists found a way to hack into supposedly secure Apple products long before anyone knew that was possible.

New media professor David Carroll from New York City's New School believes Cambridge was telling the truth then, not now. "They are not bullshitting when they say they have thousands of data points."

Speaking to a Big Data industry conference in Washington May 15, fugitive National Security Agency whistleblower Edward Snowden implored his audience to consider how the mass collection and preservation of records on every online interaction and activity threatens our society. "When we have people that can be tracked and no way to live outside this chain of records," he said, "what we have become is a quantified spiderweb. That is a very negative thing for a free and open society."

Facebook has announced no plans to dispense with any of its lucrative slicing, dicing and segmenting ad tools, even in the face of growing criticism. But in the past few weeks, the company has been fighting off denunciations about how its advertising tools have turned it into, as Engadget writer Violet Blue put it, "a hate-group incubator" and "a clean, well-lit place for fascism." Blue published an article headlined "The Facebook President and Zuck's Racist Rulebook" accusing the company of encouraging Holocaust denial, among other offenses, because of its focus on money over social responsibility. The Guardian accused it of participating in "a shadowy global operation [behind] The Great British Brexit Robbery" and has just published a massive trove of anti-Facebook revelations called "The Facebook Files." Two recent books are highly critical of how Facebook tools have been used in recent elections: Prototype Politics: Technology-Intensive Campaigning and the Data of Democracy by Daniel Kreiss, and Hacking the Electorate: How Campaigns Perceive Voters by Eitan Hersh.

When Trump, the first true social media president, appointed his son-in-law, Jared Kushner, as an unofficial campaign aide, Kushner went to Silicon Valley, got a crash course in Facebook's ad tools and initiated the campaign's Facebook strategy. He and a digital team then oversaw the building of Trump's database on the shopping, credit, driving and thinking habits of 220 million people. Now in the White House, Kushner heads the administration's Office of Technology and Innovation. It will focus on "technology and data," the administration stated. Kushner said he plans to use it to help run government like a business, and to treat American citizens "like customers."

The word customers is crass but key. The White House and political strategists on both sides have access to the same tools that marketers use to sell products. By 2020, behavioral science, advanced algorithms and AI applied to ever more individualized data will enable politicians to sell themselves with ever more subtle and precise pitches.

German IT consultant Bornschein says the evolution of using more data points to more precisely predict human behavior will continue unless and until society and lawmakers demand restrictions: "Do we really want to use all capabilities that we have in order to influence the voter? Or will we make rules at some point that all of that data-magic needs to be transparent and public? Whether this is playing out as utopia or a dystopian future is a matter of our discussion on data and democracy from now on."

During and after the past U.S. election, Jeffrey Jay Ruest—a Trump supporter "very low in neuroticism, quite low in openness and slightly conscientious," according to Cambridge Analytica's psychographics, and a man who does indeed care very much about guns—was going about his business, unaware that Alexander Nix had flashed his GPS coordinates and political and emotional tendencies on a screen to impress a ballroom filled with global elites.

Nix displayed Ruest's full name and coordinates at the event in September 2016, although they have been blacked out on YouTube. I found him in May 2017, with the help of Swiss privacy activist Dehaye, and through some of his friends on Facebook, and emailed him a link to the YouTube video of Nix's talk. The Navy veteran and grandfather says he only signed up for Facebook to see pictures of his grandchildren, and he is disturbed by the amount of information about him the strategists seem to have. "They had the latitude and longitude to my house," says Ruest, who lives in a Southern state. "And that kind of bothers me. There's all sorts of wackos in the world and I'm out in the middle of nowhere. When I pulled it up on a GPS locator, it actually showed the little stream going by my house. You could walk right up to my house with that data."

Ruest, who works in operations for a power company, had never heard of psychographic political microtargeting until I called him. "I don't quite know how I feel about that," he says. "They could use it in advertising to convince you to buy things that you don't need or want. Or they can use it to target you. I lean conservative, but I'm very diplomatic in the way I look at things. And I definitely don't want to appear otherwise. I believe everyone has a right to their opinion." He adds that he is already careful about not answering quizzes or responding to anonymous mailers, but he says, "I might try to be a little more careful than I am now. I am really not comfortable with them publishing that kind of data on me."

Too late. Ruest, like almost every other American, has left thousands of data crumbs for machines to devour and for strategists to analyze. He has no place to hide. And neither do you.