- Deepfakes are a type of synthetic content, generated with the aid of artificial intelligence, that can depict scenes and events that did not happen.

- Images and videos can often contain clues and inconsistencies that can expose their artifice, though more advanced versions are much harder to detect.

- Analyzing sources to establish the provenance of a piece of video, audio or text is a simple and effective first step to debunking many existing deepfakes.

- Special programs and tools, some of which use machine learning, exist to help identify deepfakes, though such analysis provides only a degree of certainty and could yield false negatives.

- Educating regulators and political leaders about the issue of deepfakes remains the most pertinent challenge.

A trickle of AI-fueled misinformation has turned into a powerful stream over the past year, with fake photos and videos—from Donald Trump's and Vladimir Putin's "arrest" to the Pope's "gangsta" outfit—highlighting the scope of the problem.

"Deepfake" is an umbrella term for various types of synthetic content, created or altered with the aid of artificial intelligence, which can appear to show events, scenes or conversations that never happened.

These creations come in a variety of visual, audio, and textual forms and can feature something innocuous, such as Jim Carrey in The Shining, or something far more sinister and dangerous, like the fake videos of Joe Biden's "address to the nation."

Initially, deepfake technology was largely used to generate pranks and non-consensual pornography. Now, it is increasingly deployed as a vehicle for misinformation: scientific, medical, financial, and, perhaps most worryingly, political.

Newsweek previously reported on warnings that these technologies already present a real threat and have the potential to upend the democratic process in the 2024 election, with calls growing louder for regulators, big tech, and governments to intervene.

Meanwhile, the general public—the ultimate target and consumer of such material—is mostly left to its own devices with deepfakes. So what can the average social media user do to protect themselves and others from AI-generated hoaxes?

Here are some basic tips and tools Newsweek compiled to help readers navigate the tumultuous waters of digital content.

Core Principles of Deepfake Detection

Experts who spoke to Newsweek on this topic agreed that the primary objective when assessing the authenticity of a piece of content is to scrutinize its provenance.

Sam Gregory, executive director of the Witness Media Lab, which studies deepfakes, suggests the SIFT method: Stop, Investigate the source, Find alternative coverage, and Trace the original.

"People should slow down on clicking the reposting or retweeting button when a piece of media's intent and context is unclear," Gregory told Newsweek.

"Then follow the best practices of media literacy for any suspicious image, potentially AI-generated or not, then find out who created the image, see if there are other versions or perspectives if it's claimed to be a real scene, and then look to see if there's an actual original that's been manipulated."

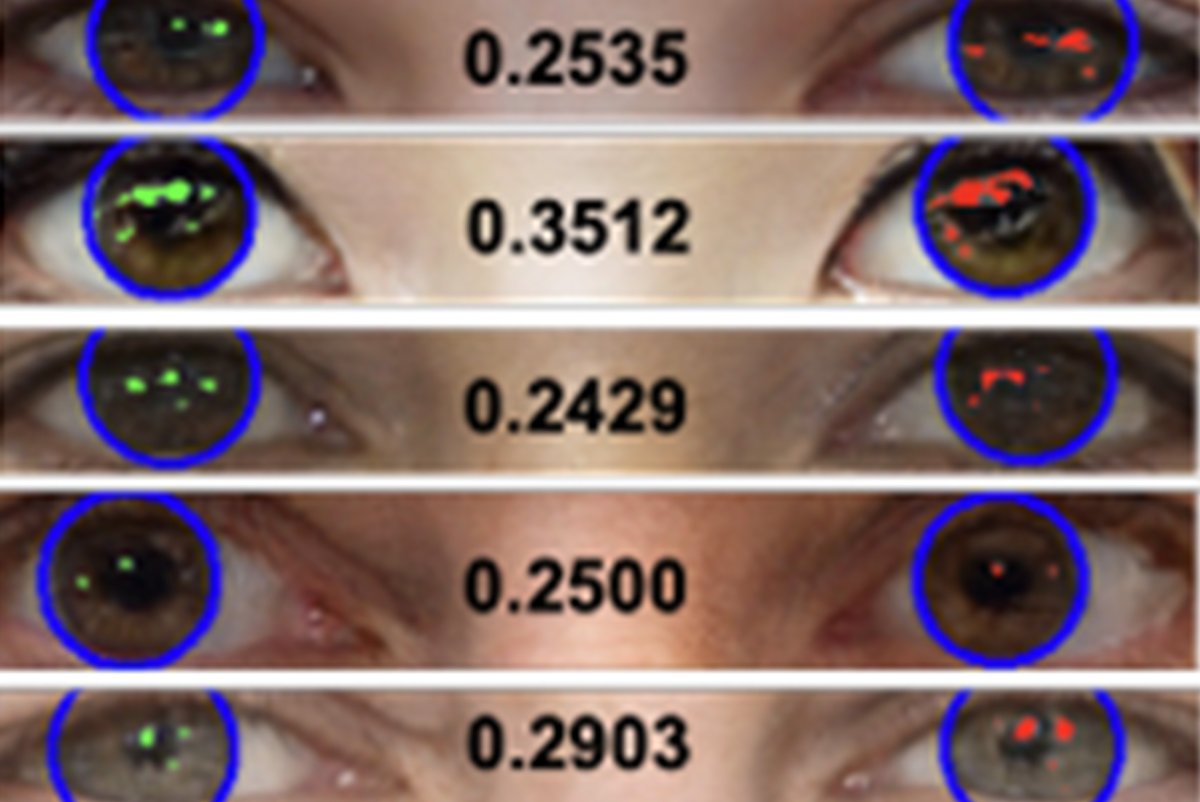

When it comes to more technical solutions, there are, broadly speaking, two streams of deepfake detection algorithms, Siwei Lyu, a University at Buffalo expert on deepfakes, tells Newsweek.

"The first is data-driven methods. These methods are deep neural networks themselves and are trained from a large number of media of original and deepfake nature. After training, the models will have the ability to tell apart real from deepfakes," Lyu said.

"The other stream is evidence-based methods. They use tell-tale signs of deepfakes that researchers observe, and AI algorithms designed specifically to look for such evidence."

The evidence-based methods are easier to understand, although, Lyu warns, their limitation is that deepfake makers can learn and fix the problem, meaning that what might work today may not tomorrow.

Still, many of the general principles of deepfake detection, such as establishing provenance using tools like reverse image searches and looking out for inconsistencies, will continue to apply and are therefore worth exploring.

Images and Photos

From Stalin-era airbrushing of political enemies from old photos to Photoshop tampering in the 2000s, the alteration of images with the purpose of concealing facts or pushing a false narrative—or just for fun—is nothing new.

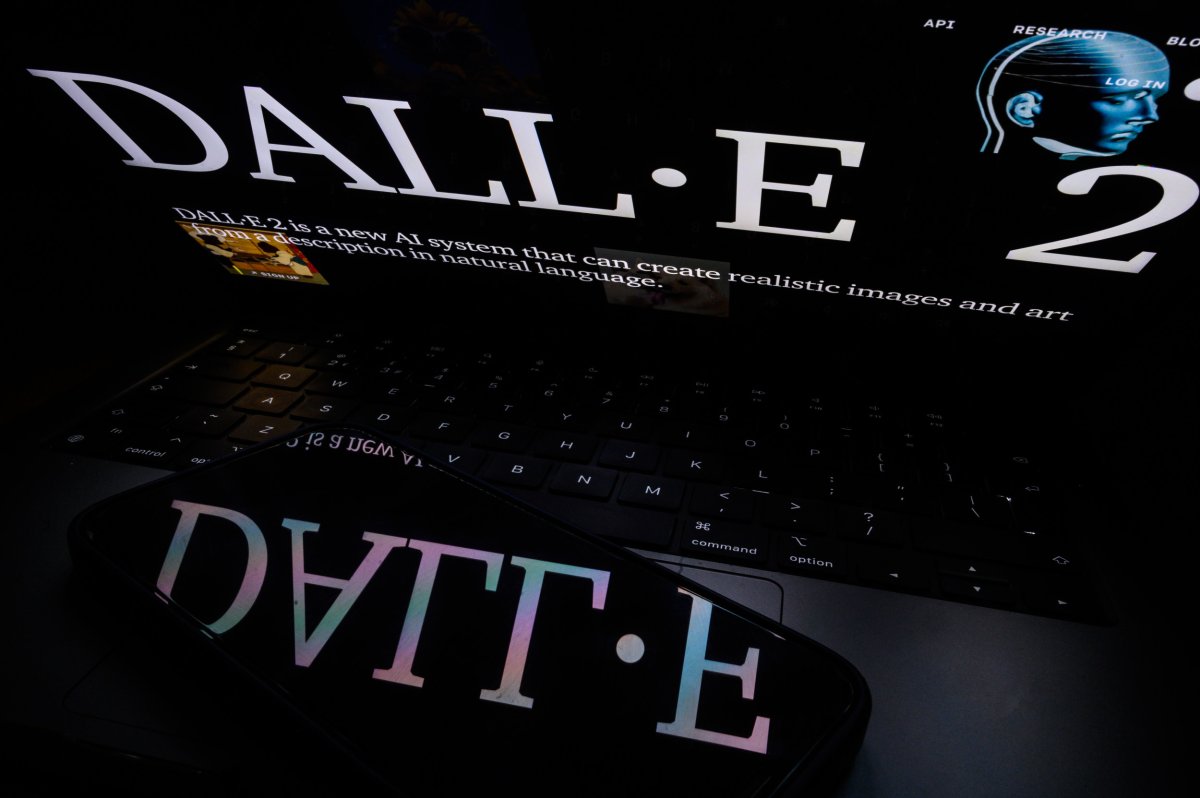

But the practice has become vastly more widespread and effective with the arrival of AI and machine learning-based technologies—Midjourney, DALL-E and Stable Diffusion, to name a few.

These mostly free-to-use tools are widely accessible, though some of the more sophisticated ones are either expensive or not publicly available. The platforms already have the capacity to seamlessly alter and warp real images, as well as create entirely new ones, whether it be artificial artworks or ultra-realistic human visages.

More worrying still, tools like Midjourney can be used to reimagine real events, or even create entirely fictitious scenes.

Stability AI is excited to announce the launch of Stable Diffusion Reimagine! We invite users to experiment with images and ‘reimagine’ their designs through Stable Diffusion. More info → https://t.co/lVxsBvwd0u pic.twitter.com/jXsYe8HJ89

Stability AI (@StabilityAI), March 17, 2023

So how would one go about identifying and debunking this type of creation? Unfortunately, there is no surefire way of confirming if an image is a "deepfake," but there are ways to spot at least some of them, with varying degrees of certainty.

First, the core principles of fact-checking still apply. That could be as simple as establishing the source, such as by describing the event or scene featured in an image and plugging it into a search engine to see if any reliable outlets have reported on it (and perhaps included other photos, alternate angles or videos).

Reverse image search tools, such as the ones provided by Google Lens or TinEye, are handy for finding the source of visual material, as well as revealing the source images that may have been combined in its construction. Watermarks are automatically added by many of the publicly available AI image generators, so look out for those too.
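To give a rough sense of why a reverse image search can still match a picture after it has been resized or recompressed, here is a minimal Python sketch of "average hashing," one well-known perceptual hashing technique. It is an illustration only: the search engines named above do not publish their methods, and the function names and the toy gradient images below are made up for this example. Images are represented as plain grids of brightness values, at least 8 pixels on each side.

```python
from typing import List

Gray = List[List[int]]  # rows of 0-255 brightness values


def shrink(image: Gray, size: int = 8) -> Gray:
    """Downscale to size x size by averaging equal blocks of pixels.

    Assumes the image is at least `size` pixels wide and tall.
    """
    height, width = len(image), len(image[0])
    small = []
    for row in range(size):
        top, bottom = row * height // size, (row + 1) * height // size
        line = []
        for col in range(size):
            left, right = col * width // size, (col + 1) * width // size
            block = [image[y][x] for y in range(top, bottom) for x in range(left, right)]
            line.append(sum(block) // len(block))
        small.append(line)
    return small


def average_hash(image: Gray, size: int = 8) -> List[int]:
    """One bit per cell: 1 if the cell is brighter than the overall mean."""
    cells = [value for line in shrink(image, size) for value in line]
    mean = sum(cells) / len(cells)
    return [1 if value > mean else 0 for value in cells]


def hamming_distance(a: List[int], b: List[int]) -> int:
    """Number of positions where two hashes disagree."""
    return sum(x != y for x, y in zip(a, b))


# Toy images: a left-to-right gradient, a brightened copy of it,
# and an unrelated top-to-bottom gradient.
original = [[x * 8 for x in range(32)] for _ in range(32)]
brighter = [[min(255, value + 10) for value in line] for line in original]
unrelated = [[y * 8 for _ in range(32)] for y in range(32)]

print(hamming_distance(average_hash(original), average_hash(brighter)))
print(hamming_distance(average_hash(original), average_hash(unrelated)))
```

A small distance suggests two files are versions of the same picture, while a large one suggests they are unrelated. A match only helps trace where an image came from; it says nothing about whether the original itself is authentic.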

Even if the obvious red flags aren't present, this type of content often contains traces of manipulation and AI "tells," which may be hard to spot at first glance.

These could be visual anomalies or inconsistencies, such as a mismatch of skin tone (when a person is pictured), or leftover "artifacts" of digital manipulations, like blurred sections or "warped" lines visible at closer look (an AFP guide provides more insight into artificial image recognition).

"The current state of generative AI means that often there are problems with the hands (for example, distortion), waxy skin, unrealistic shadows, eye reflections that don't make sense in the real world, and objects that appear to blend into other objects outside the laws of physics," Gregory said.

"AI tools also do not do well with directly creating text within an image and won't, for example, necessarily capture the correct logo or tag for a law enforcement officer."

Generative adversarial network (GAN) technology has been widely deployed to create "fake faces," a growing problem for social media platforms where these products are used to fabricate accounts and create whole botnets.

While almost impossible to spot in a myriad of real faces, groups of such artificial faces (for example, in a botnet) can be more revealing: the eyes tend to be located on the exact same level, the backgrounds are blurred, objects (like glasses or earrings) are either missing or "cutting" into the skin.
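The "eyes on the exact same level" tell can be sketched as a simple check across a group of profile pictures. The Python example below is a hedged illustration, not a tool any expert quoted here is known to use: it assumes eye positions have already been extracted by some face-landmark tool (not shown) and expressed as fractions of the image width and height, and the thresholds `max_spread` and `min_faces` are hypothetical values chosen for the example.

```python
from statistics import pstdev
from typing import List, Tuple

# (left_eye, right_eye), each as (x, y) fractions of image width/height.
EyePair = Tuple[Tuple[float, float], Tuple[float, float]]


def eye_position_spread(faces: List[EyePair]) -> float:
    """Largest standard deviation across the four eye coordinates."""
    columns = [
        [left[0] for left, _ in faces],
        [left[1] for left, _ in faces],
        [right[0] for _, right in faces],
        [right[1] for _, right in faces],
    ]
    return max(pstdev(column) for column in columns)


def looks_templated(
    faces: List[EyePair], max_spread: float = 0.01, min_faces: int = 5
) -> bool:
    """Flag a group whose eye positions barely vary from image to image."""
    if len(faces) < min_faces:
        return False  # too few images to say anything
    return eye_position_spread(faces) <= max_spread


# Hypothetical measurements from six avatars in a suspected botnet.
suspected = [
    ((0.380, 0.480), (0.620, 0.480)),
    ((0.382, 0.481), (0.621, 0.479)),
    ((0.379, 0.480), (0.619, 0.481)),
    ((0.381, 0.479), (0.620, 0.480)),
    ((0.380, 0.482), (0.622, 0.480)),
    ((0.381, 0.480), (0.620, 0.481)),
]
print(looks_templated(suspected))
```

Real photos taken by different people tend to place the face differently in every frame, so near-identical eye positions across many accounts are a reason to look closer, not proof of fabrication.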

Zooming in on an image can also reveal anomalies or suspicious details, such as inconsistencies in light and color, clothing mismatches or even extra teeth.

Henry Ajder, a deepfake expert and BBC podcast host, details the recent example of the Pope in a white puffer jacket.

AI-generated image of Pope Francis goes viral online. pic.twitter.com/ap7N099wpy

— Daily Loud (@DailyLoud) March 25, 2023

"I would take the image and download it so I can look at it in a bigger frame. I will magnify and further analyze isolated parts of the image for consistencies, inconsistencies, certain tell-tale signs based on the model it could be from," Ajder told Newsweek.

"So in the case of that Pope image, I was immediately looking to see things like the hands, and in this case one of the hands is clearly misformed.

"I was looking at his glasses and saying the frames are not generated in a consistent manner, they're kind of warped, and indeed the shadowing on his face did not match the rest of the frames.

"I was also looking at the overall artificial smoothing quality on the face in particular, which is indicative of the current stylized output a lot of images generated in Midjourney have, because it's a much more aesthetic, artistically-driven, text-to-image tool."

But while this can be effective, we shouldn't rely solely on identifying these kinds of tricks, others warn.

"These systems improve fast, get better and better, and aim to achieve a more realistic look. We know from previous experience that these 'clues' go away quickly—for example, people used to think that deepfakes didn't blink, and now they do," Gregory said.

For the more advanced and challenging cases, there are also publicly accessible digital tools (often themselves built on AI software) designed to detect and debunk deepfakes.

For example, Mayachitra offers a deep learning tool for detecting GAN-sourced images (including the fake faces). Hugging Face, a platform for building and sharing open-source AI tools, hosts a deepfake image detector that estimates the probability that a piece of content is AI-generated.

But results from these tools should not be seen as conclusive either, as they can produce false positives and false negatives, experts warn.

"In general, detection tools work well targeted to specific tools for making a fake—so if you don't know how it was made, you're at a disadvantage—and best with high-quality images rather than images that may have already circulated online and been reduced in quality," Gregory said.
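Why a detector's verdict is not conclusive can be shown with a little arithmetic. The Python sketch below applies Bayes' rule to a hypothetical detector. The accuracy figures and the share of fakes among checked images are invented for illustration and do not describe any tool named in this article.

```python
def probability_fake_given_flag(
    sensitivity: float, false_positive_rate: float, base_rate: float
) -> float:
    """Chance that an image flagged as fake really is fake.

    sensitivity: share of real fakes the detector flags.
    false_positive_rate: share of authentic images it wrongly flags.
    base_rate: share of fakes among all images being checked.
    All three are assumed to lie strictly between 0 and 1.
    """
    flagged_fakes = sensitivity * base_rate
    flagged_authentic = false_positive_rate * (1 - base_rate)
    return flagged_fakes / (flagged_fakes + flagged_authentic)


def probability_fake_given_clear(
    sensitivity: float, false_positive_rate: float, base_rate: float
) -> float:
    """Chance that an image the detector passed is fake anyway."""
    missed_fakes = (1 - sensitivity) * base_rate
    cleared_authentic = (1 - false_positive_rate) * (1 - base_rate)
    return missed_fakes / (missed_fakes + cleared_authentic)


# Hypothetical detector: catches 90% of fakes, wrongly flags 5% of real images.
for share_of_fakes in (0.01, 0.5):
    flagged = probability_fake_given_flag(0.90, 0.05, share_of_fakes)
    cleared = probability_fake_given_clear(0.90, 0.05, share_of_fakes)
    print(f"fakes={share_of_fakes:.0%} flagged={flagged:.1%} cleared={cleared:.1%}")
```

With these made-up numbers, when only 1 in 100 images checked is fake, a flag is right only about 15% of the time; when half are fake, it is right about 95% of the time. The same tool can be far more or less trustworthy depending on what it is pointed at, which is one reason to treat its output as a lead rather than a verdict.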

Video and Audio

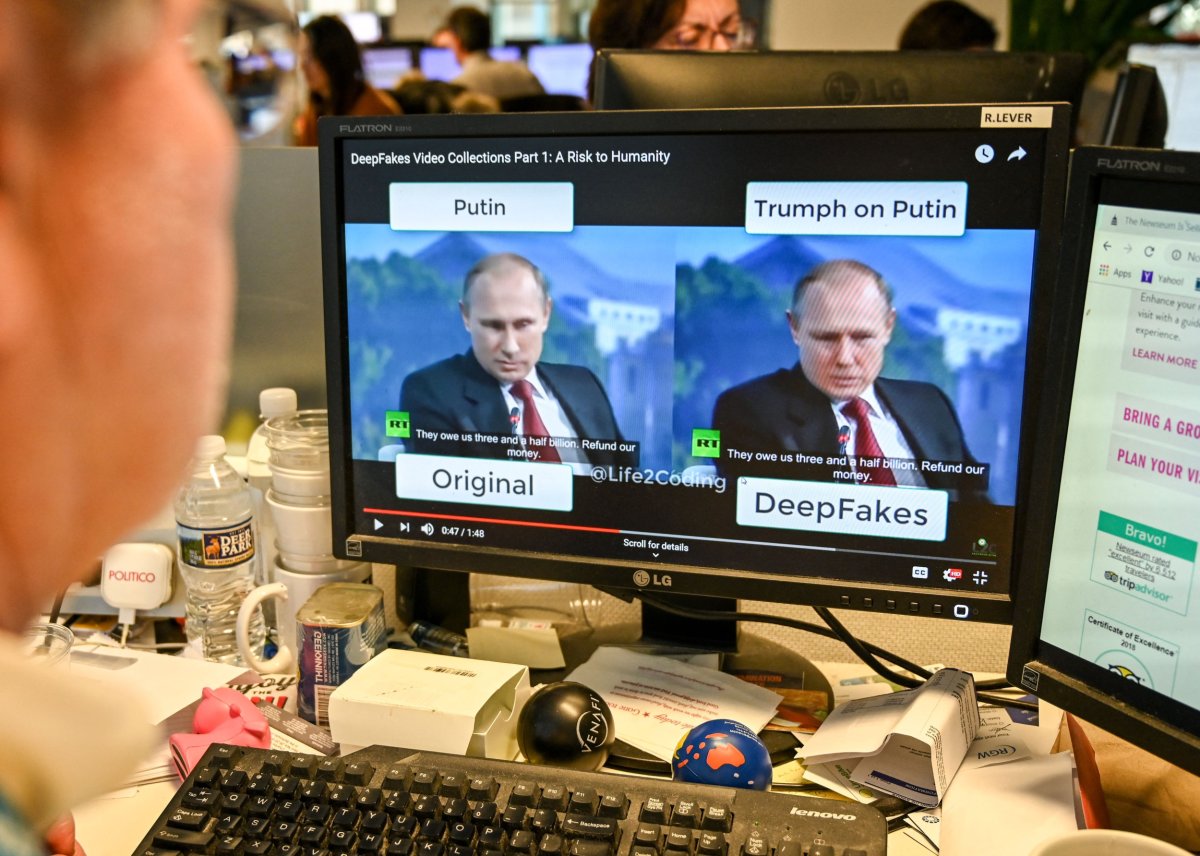

The emergence of deepfake videos is widely perceived as a milestone in the AI space, with the resulting mis- and disinformation threatening to further damage public trust in media and upend national and global politics.

But it merely marks the latest step in an already booming industry.

Taking its roots in CGI and VFX technologies that moviemakers, game developers and disinformation agents alike have used for years, the latest rendition is a significant improvement on poor-quality, unrealistic products often dubbed "shallowfakes."

The early attempts to deploy this technology for misinformation purposes, including in clips featuring prominent celebrities and politicians, were widely mocked and dismissed as amateurish, but still enough for some forward-thinking observers to sound the alarm.

Fast forward to 2023, and social media is awash with celebrities deepfaked into porn clips, ultra-realistic videos of President Joe Biden (including his "announcement" about an alien invasion), fake Zoom call participants, fictitious live news segments, and other types of hoaxes that often combine synthetic video with synthetic audio to make the end result nearly indistinguishable from authentic material.

A handful of AI and machine learning-driven programs and software tools, including those completely free and easy to use, are behind the bulk of this type of content—and others are likely already being secretly developed and deployed by governments and disinformation agents around the world.

These can be complemented and enhanced by similar services for audio, including voice cloning tools and synthetic voice generators that are a far cry from the robotic telemarketing callers of the past.

Combined, these allow the creation of entirely fictional scenes and scenarios that are highly convincing. So how can you spot this type of ultra-realistic hoax?

At least in their previous iterations, most deepfake videos tended to exhibit a number of "tells" that make them instantly suspicious, including faults or inconsistencies when it comes to:

- Fine details

- Lights and reflections

- Transitions and movements

- Alignment of audio and video

Some early examples of deepfake videos failed to recreate natural eye movements and blinking, while body shape, posture, and gesticulation could appear awkward or distorted.

Zooming in on the face may be another way to check for imperfections, such as disappearing wrinkles or moles.

Color and shade mismatches are often a telling feature, especially in clips where someone's face was digitally superimposed on another body. Accessories such as earrings, glasses or hats could provide another clue, for example, through misplaced reflections.

Transitions are especially important when identifying deepfake faces in live feeds, such as a Zoom call. While there have already been reported attempts by nefarious actors to infiltrate video conferences by impersonating guests, the face "behind the mask" can often be revealed if they are asked to turn their head and show their profile.

Other artifacts and abnormalities, such as suspicious flickering, distortion or lip-synching malfunctions, can also be a red flag. An unrelated background sound or the absence of any audio can be reason enough to pause.

And as with fake photos, there are already multiple technical tools one could use, ranging from metadata analysis and digital forensics to machine learning-powered detection algorithms.

Some examples include Deepware, Intel's FakeCatcher, and Microsoft's Video Authenticator.

There are limits to how effective these tools are, particularly when it comes to synthetic voice, as audio is generally more difficult to search for and verify than visual content (and the tools that do exist, such as detectors described in research presented at USENIX, may not be available to the wider public yet).

As more advanced methods emerge that eliminate some of these shortcomings in deepfake generation, AI detection software also evolves.

One example is Reality Defender, a "government-grade" AI content detection platform that takes a holistic approach, allowing it to analyze various aspects of a piece of content, such as sound and visuals, to give a percentage estimate of its authenticity. The company also attempts to build highly sophisticated deepfakes to "break" its own models in order to train them and pre-empt new generation techniques before they spread.

"A bit like vaccine researchers trying to anticipate the next pandemic, we can proactively generate fingerprints of potential models and signatures before they become a problem," Ben Colman, Co-Founder and CEO of Reality Defender, tells Newsweek. "So a lot of things that appear novel in public are actually already being used in our data sets to train new models."

ChatGPT and Text

The advancement of artificially generated text is arguably the most rapid, unexpected, and disruptive of the AI innovations.

ChatGPT has been the most widely covered of the AI-based text generation models, but it is by no means the only one, with Microsoft and Google joining the crowded field in recent weeks.

And it is already touted as an existential threat for swathes of industries and professions, as well as the cause of significant disruption in other sectors: academia, politics, journalism, and PR, to name just a few.

The research lab OpenAI launched ChatGPT in November 2022, offering the most up-to-date version of the language model chatbot—an artificial intelligence computer program that engages in human-like conversation in response to a given prompt.

But as thousands of users piled onto the new digital "toy," it soon became clear just how much danger could come from a platform that can generate, freely and at scale, text that is virtually indistinguishable from that written by a human (and which itself could be incorporated into audio-visual "deep" content).

So whether you are a college professor trying to establish if a student has plagiarized from their robotic helper, or a journalist seeking to verify an article or claim, you are faced with the daunting task of attempting to detect and debunk a product of cutting-edge technology. Is it possible?

As recent examples show, because ChatGPT is unrestrained by such inherently human concepts as "honesty" or "factuality," it can very effectively and convincingly generate entirely fictitious content (including made-up events, sources and people) if prompted to do so.

In some cases, that could expose the text's artifice. In a recent example, OSINT (open-source intelligence) researchers were able to identify and flag the use of AI-generated copy in an article because the sources and links it used were not, in fact, real.

So, as with photo and video content, basic due diligence and research would be a good starting point for such a debunk.
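As a starting point for that kind of due diligence, the links cited in a suspicious text can be pulled out into a checklist. The Python sketch below is a minimal illustration using only the standard library. The function names and the sample text are hypothetical, and the code deliberately makes no network requests: whether a link resolves, and whether the page says what the text claims, still has to be checked by hand.

```python
import re
from typing import List
from urllib.parse import urlparse

# Matches http(s) links up to the next space, bracket or angle bracket.
URL_PATTERN = re.compile(r"https?://[^\s<>()\[\]]+")


def extract_links(text: str) -> List[str]:
    """Unique URLs in order of first appearance, trailing punctuation trimmed."""
    links: List[str] = []
    for match in URL_PATTERN.findall(text):
        url = match.rstrip(".,;:!?\"'")
        if url not in links:
            links.append(url)
    return links


def domains_to_check(text: str) -> List[str]:
    """Alphabetical list of the sites a text claims to cite."""
    return sorted({urlparse(url).netloc.lower() for url in extract_links(text)})


sample = (
    "The study (https://example.org/report) was confirmed by officials, "
    "see https://example.com/news?id=7. More at https://example.org/report."
)
for link in extract_links(sample):
    print(link)
print(domains_to_check(sample))
```

An empty list is itself informative: a text that makes specific factual claims but cites nothing traceable deserves the same caution as one whose links lead nowhere.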

But if no obvious red flags are present, ChatGPT-made text can be impossible to detect with the naked eye. To help, one may have to turn to other AI-based software, including an AI-generated content detection model hosted on Hugging Face, or OpenAI's own free tool called "AI text classifier."

Other tools are available too, both free and premium ones, including the likes of ZeroGPT and Copyleaks, while more are in development (by Reality Defender, among others) that would analyze a piece of content on several levels, including visual, audio, and textual.

Still, this kind of software isn't perfect and often comes with caveats and conditions, such as a minimum character count and a certain margin of error, with a non-zero chance of mislabeling a text as fake or real.

Furthermore, such tools are in short supply for languages other than English, as well as for "hybrid" material where AI-based copy was revised or altered by a human.

Crucially, most of these services and tools operate on the basis of probability and therefore usually cannot provide a conclusive assessment of a piece of text being real or artificial, though that is still better than nothing (and they will continue to improve as the industry develops).
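One practical way to handle a probability rather than a verdict is to leave room for "don't know." The Python sketch below shows that idea under stated assumptions: the score is whatever number between 0 and 1 a detection tool reports, and the minimum length and both cut-offs are hypothetical values picked for illustration, not settings taken from any of the tools mentioned above.

```python
def interpret_score(
    text: str,
    ai_probability: float,
    min_chars: int = 1000,
    low: float = 0.2,
    high: float = 0.9,
) -> str:
    """Turn a detector's probability into a cautious, human-readable label."""
    if not 0.0 <= ai_probability <= 1.0:
        raise ValueError("ai_probability must be between 0 and 1")
    if len(text) < min_chars:
        return "too short to assess"  # short texts give detectors little to go on
    if ai_probability >= high:
        return "likely AI-generated"
    if ai_probability <= low:
        return "likely human-written"
    return "inconclusive"  # the wide middle band is not evidence either way


essay = "word " * 400  # stand-in for a 2,000-character submission
print(interpret_score(essay, 0.95))
print(interpret_score(essay, 0.55))
print(interpret_score("A short post.", 0.95))
```

Even the confident labels are worded as "likely" on purpose: as noted above, a human-edited AI draft or a text in a language the tool was not built for can land on the wrong side of any threshold.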

'Don't Trust—But Verify!'

So where does this leave us, the average internet user bombarded with vast amounts of content on a daily basis? Education remains by far the biggest challenge in tackling this problem, experts admit.

"We need the regulators and elected officials to understand the scale of the problem and develop guidelines, because the industry, which is profit-driven, won't do it on their own accord," Colman says. "They take a very passive, reactive stance, where they only address the issue if it is flagged directly to them."

Educating the public is also necessary, but that too has its limits, says Ajder: "The everyday person probably doesn't have the time or the resources to continuously monitor and check how the models are evolving, what new tools are being released for example, so it is a really challenging landscape. It's not very productive to put the burden on the individual in these cases."

Instead, experts argue it is better to encourage both traditional media literacy and more robust AI media literacy so people can develop a broader understanding of these systems instead of gaining false confidence in their own ability to detect deepfakes.

"For example, it's helpful to know what is increasingly easy to do right now—fake audio, or create realistic images quickly—while some activities, such as realistic full-face deepfakes in complex scenes are still hard to do well, and are unlikely to be used for deceptive videos in, for example, local politics," Gregory said. "This will change over time but is part of the role of the media to keep people informed."

But until then, the safest approach is to stay skeptical, vigilant, and alert to the possibility that a piece of content is fake, and that you may not have the capacity to independently verify it.

"The saying was 'trust but verify'; well, now it's 'don't trust—but verify,'" Colman says.

Uncommon Knowledge

Newsweek is committed to challenging conventional wisdom and finding connections in the search for common ground.

About the writer

Yevgeny Kuklychev is Newsweek's London-based Senior Editor for Russia, Ukraine and Eastern Europe. He previously headed Newsweek's Misinformation Watch and ...
