This article first appeared on Just Security.

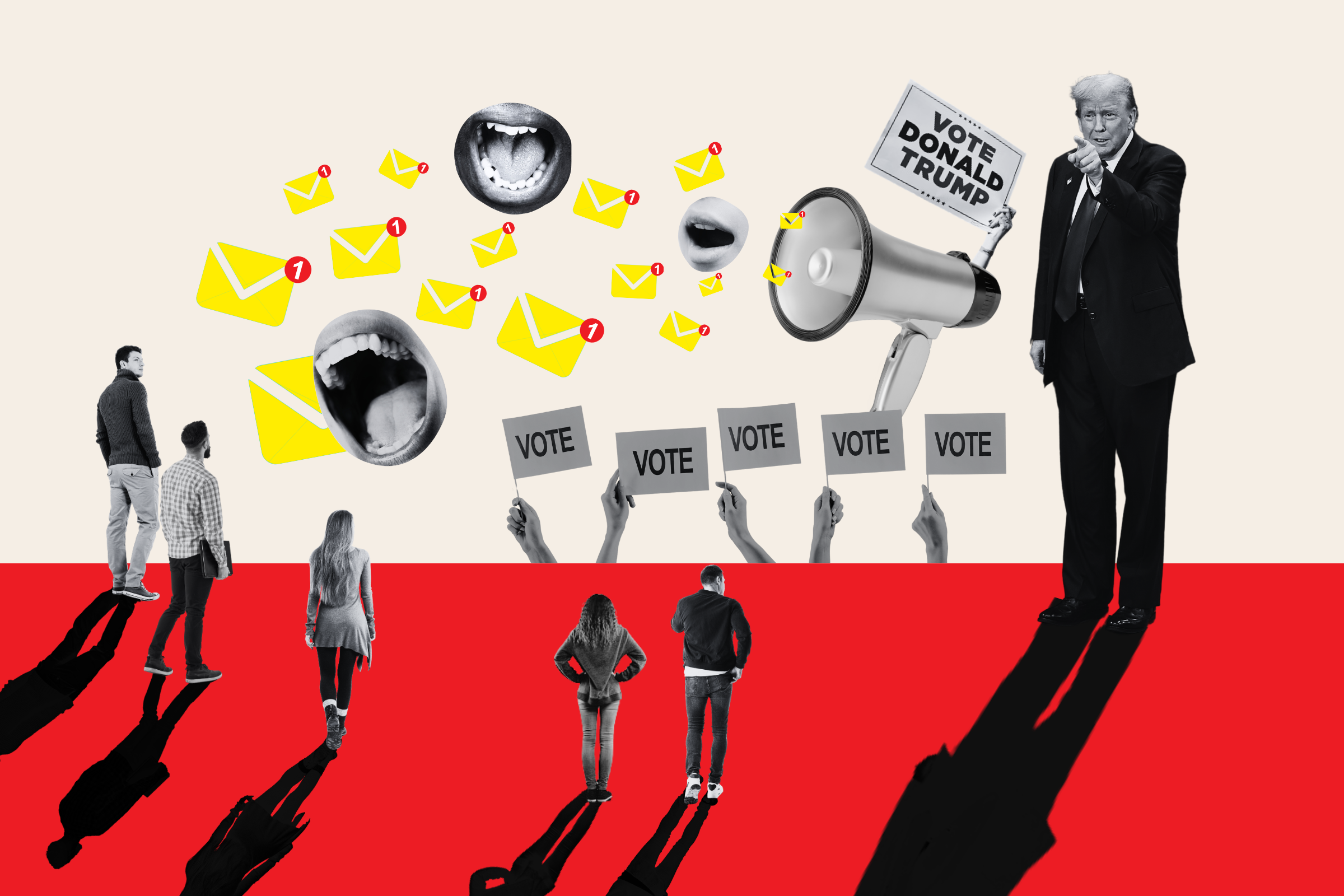

Since Facebook disclosed that at least 150 million Americans were exposed to Russian propaganda on its platform in the run-up to the 2016 election, pressure has been growing for the company to demonstrate transparency and notify affected users.

During testimony by Facebook General Counsel Colin Stretch on Capitol Hill at the beginning of November, members of Congress called on the social media giant to do just that.

At the same time, I started a public petition calling for such notification, which swelled to nearly 90,000 signatures.

Stretch argued that such a disclosure would be technically difficult, but lawmakers pressed the company to explore it. It was remarkable that he came neither prepared nor willing to offer any specifics about those difficulties. He appeared to be saying that it would be hard to reach every affected person, not that it would be hard to do most of the job.

In a strongly worded follow-up letter, Senator Richard Blumenthal (D-CT) gave the company an explicit assignment.

"Consumer service entities like yours have long understood their duty to inform their users after mistakes are uncovered," Senator Blumenthal wrote to Facebook CEO Mark Zuckerberg. "You too have an obligation to explain to your users exactly how Russian agents sought to manipulate our elections through your platform." Blumenthal set November 22nd as the deadline for a response from Facebook.

On Nov. 22, the company announced its plan in a blog post entitled "Continuing Transparency on Russian Activity."

"We will soon be creating a portal to enable people on Facebook to learn which of the Internet Research Agency Facebook Pages or Instagram accounts they may have liked or followed between January 2015 and August 2017," the company said. "This tool will be available for use by the end of the year in the Facebook Help Center."

Certainly, this proposal is a step in the right direction, especially for a company that has been slow to divulge details of what may ultimately go down in history as one of the most extensive and effective propaganda campaigns by a foreign adversary against the United States, and that has in fact made it harder for independent researchers to investigate the problem.

But is it enough? Did Facebook answer Congress's call to notify users?

On balance, the answer is clearly no.

First, the application as described is not a broad notification but a self-service tool tucked away in the "help center" that users must deliberately seek out. Many users who liked or followed known propaganda pages or accounts may never go looking for this information, which almost certainly means a significant drop-off in the share of affected people the disclosure actually reaches.

Facebook has stopped well short of promising to actually notify users with, say, a notice at the top of the timeline of every affected account. Imagine a car manufacturer posting information about a defective product only in its online help center, without individually notifying the customers it knows full well bought that product.

Second, as the Wall Street Journal's Jack Nicas pointed out, "the disclosure falls short of notifying more than 100 million other users who came across the pages' ads and posts, and may have liked, shared or commented on them. And it provides little information to those users who did follow the pages."

In short, providing information only to those who directly liked or followed currently known Internet Research Agency Pages addresses just a fraction of the problem and obscures the rest.

The tip of the iceberg: liking or following the Internet Research Agency's Pages. The rest of the iceberg: viewing, liking, sharing, or commenting on individual ads and organic posts generated by those Pages (even without ever visiting, liking or following the Pages).

Facebook's approach would be tantamount to notifying only people who visited a store that produced mass propaganda mailings, and not notifying all the people who received the propaganda in the mail.

Third, the information appears to be time-bound. By limiting the window to January 2015 through August 2017, Facebook may be implicitly dismissing the idea that such notifications will be needed going forward.

Does the company believe it has fortified its platform against future attacks from Russia or other state actors? Are we to believe it has completed an entirely thorough investigation and that the pages operated by the Internet Research Agency — one of possibly hundreds of troll farms funded by the Russians — were the only ones that reached Americans?

Curiously, the "help center" page depicted in Facebook's announcement doesn't even mention the word "Russia."

That leads to a fourth and final concern: why cover only Internet Research Agency pages, even within that time period? As McClatchy reported:

"Dozens, if not hundreds of troll networks" supported by Russian operatives are likely operating today, including in countries outside Russia such as Albania, Cyprus and Macedonia, said Michael Carpenter, who specialized in Russia issues as a senior Defense Department official during the Obama administration.

The company will likely argue that doing any more would be too technically difficult. But is it really? Facebook makes its billions in profit by tracking exactly what every user does on the platform.

As Krishna Bharat, a former research scientist at Google, recently told Fast Company, "it's absolutely not technically difficult." Facebook certainly has far more data than it is suggesting it will provide in this help center application, and by now it probably knows about more Russian pages and accounts than it has publicly disclosed.

Facebook likely hopes this gesture will be enough to put the issue to bed, and it certainly captured some favorable headlines. At the same time, the company is finally beginning to acknowledge that Russian propaganda on the platform targeted British voters ahead of the Brexit referendum.

And a Freedom House report published last week discovered that "online manipulation and disinformation tactics played an important role in elections in at least 18 countries over the past year, including the United States, damaging citizens' ability to choose their leaders based on factual news and authentic debate."

Given the scope of the threat to democracies around the world, it's hard to look at Facebook's latest plan as anything more than a public relations garnish meant to assuage lawmakers on the eve of the Thanksgiving holiday.

Facebook's users — and the democracies that give it the freedom to earn such outsized margins — clearly deserve more.

Justin Hendrix is Executive Director of NYC Media Lab.

Uncommon Knowledge

Newsweek is committed to challenging conventional wisdom and finding connections in the search for common ground.