Google has announced that an incoming update to its "Live Transcribe" app for Android devices will be able to translate sounds from the world into text displays.

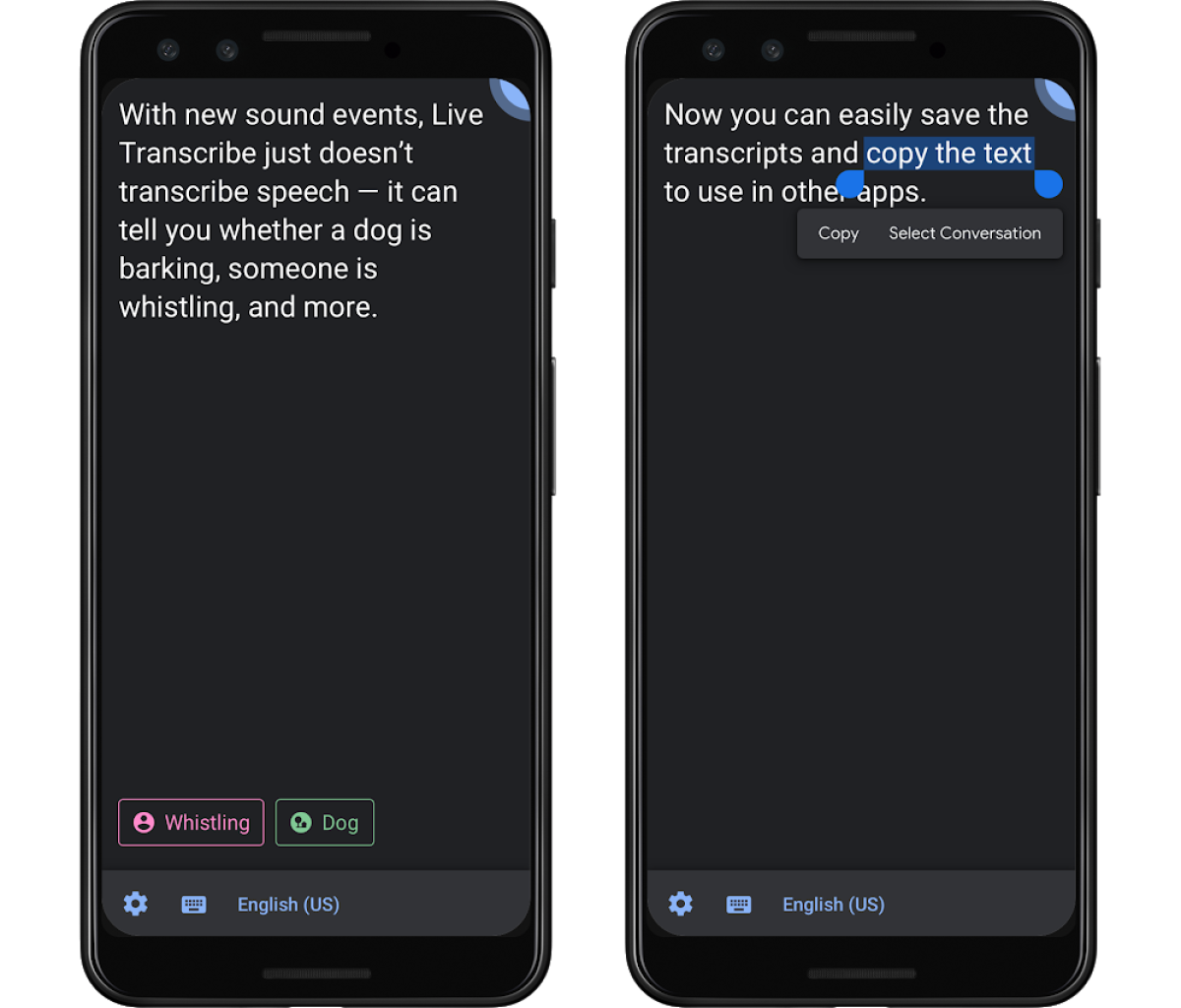

Designed for users who are deaf or hard of hearing, the new version of the app uses machine learning and can show if, for example, a dog is barking or someone is knocking on the front door. In addition, transcripts can now be saved locally on a device for up to three days.

"Seeing sound events allows you to be more immersed in the non-conversation realm of audio and helps you understand what is happening in the world," Android product manager Brian Kemler said. "This is important to those who may not be able to hear non-speech audio cues such as clapping, laughter, music, applause, or the sound of a speeding vehicle whizzing by."

An image showing the app in action indicates the text from a live transcription will appear on the main screen, while background sound event notifications pop up in the bottom left corner. Live Transcribe is available in more than 70 languages.

On Twitter, the official Android account jokingly clarified one important point after some tech journalists questioned the app's machine learning (ML) hearing abilities.

"Is a fart considered a sound event?" queried The Verge's Casey Newton. BBC journalist Dave Lee responded: "Is it weird that I really want to know the answer to this question? Has Google made ML that can detect farts?" As it turns out, the app can technically recognise human flatulence; just don't expect it to be available at launch, as it would need more testing.

The Android account wrote back saying, "Yes, our ML can do it, but it's difficult acquiring a test data set," referencing the vast quantity of data needed to fine-tune a machine learning algorithm. One Twitter user offered assistance—in the name of science—but didn't get a response.

"We're humbled by the opportunity to build helpful tools that make the world's information more accessible in the palm of everyone's hand," Kemler noted in a Google blog post. "As long as there are barriers for some people, we still have work to do. We'll continue to release more features to enrich the lives of our accessibility community and the people around them."

As reported by Android Authority, the technology giant recently unveiled a separate inclusivity feature for hard-of-hearing users called "Live Caption." It uses artificial intelligence (AI) to transcribe speech from audio or video being played on a smartphone or tablet in real time, then displays the text on the device's screen.

Uncommon Knowledge

Newsweek is committed to challenging conventional wisdom and finding connections in the search for common ground.

About the writer

Jason Murdock is a staff reporter for Newsweek.

Based in London, Murdock previously covered cybersecurity for the International Business Times UK ...