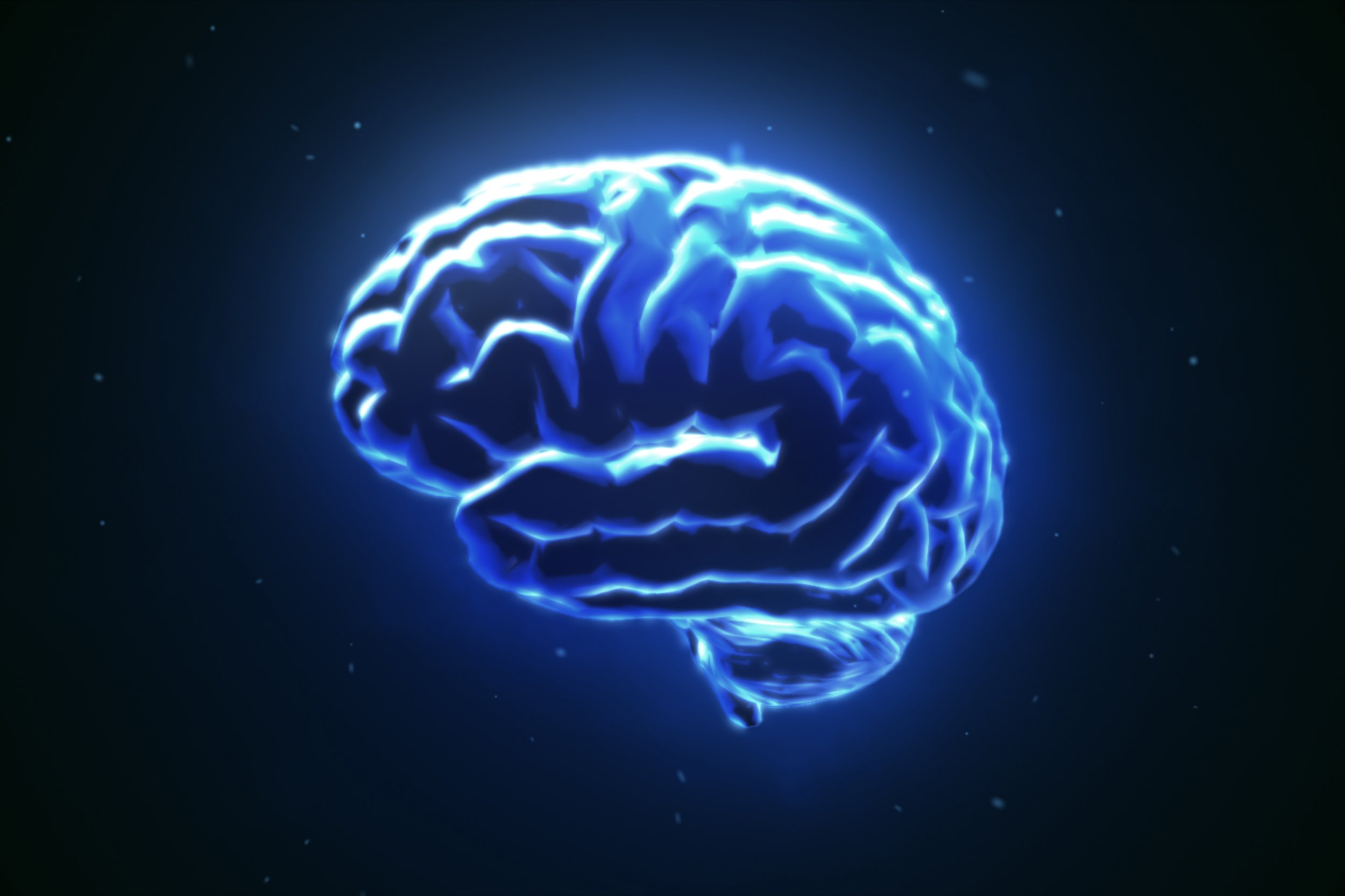

Scientists have developed a device that uses a person's brain activity to power a virtual mouth, which could one day help people who have lost the ability to speak.

A range of conditions can rob a person of speech, from strokes to Lou Gehrig's disease (also known as ALS). Current methods for simulating speech can be very inefficient, such as technology that tracks eye movements so patients can spell out their thoughts letter by letter. Such methods can manage a painstaking 10 words per minute, versus the 150 words per minute of the average speaker. But it's tough to turn brain activity into speech using machinery, because the act of speaking is a complex physical process involving the lips, jaw, tongue and vocal tract.

Dr. Edward Chang, co-author of the study published in the journal Nature, commented: "For the first time, this study demonstrates that we can generate entire spoken sentences based on an individual's brain activity."

Chang, a professor of neurological surgery and a member of the University of California, San Francisco Weill Institute for Neuroscience, continued: "This is an exhilarating proof of principle that with technology that is already within reach, we should be able to build a device that is clinically viable in patients with speech loss."

The study involved five participants who were able to speak and were visiting the UCSF Epilepsy Center for surgery. They had electrodes inserted into their brains as part of their treatment. The researchers asked them to read several hundred sentences out loud, while the team documented how the part of their brains that plays a role in language responded.

The researchers used the recordings to map how each participant's vocal tract moved as they spoke, and designed a virtual vocal tract for each person. Machine learning algorithms then used each participant's brain activity to drive the virtual tract, in what is known as a brain-computer interface. This produced a sound similar to the participant's voice.

To test whether the speech created by the virtual vocal tract could be easily understood, the researchers played it to 1,755 listeners across 16 intelligibility tasks, including transcribing what they heard.

The respondents transcribed 43 percent of sentences accurately, and were able to understand 69 percent of the words spoken on average.

Speech scientist and study co-author Dr. Gopala Anumanchipalli explained: "The relationship between the movements of the vocal tract and the speech sounds that are produced is a complicated one.

"We reasoned that if these speech centers in the brain are encoding movements rather than sounds, we should try to do the same in decoding those signals."

"We still have a ways to go to perfectly mimic spoken language," Josh Chartier, a bioengineering graduate student in the Chang lab, acknowledged. "We're quite good at synthesizing slower speech sounds like 'sh' and 'z' as well as maintaining the rhythms and intonations of speech and the speaker's gender and identity, but some of the more abrupt sounds like 'b's and 'p's get a bit fuzzy.

"Still, the levels of accuracy we produced here would be an amazing improvement in real-time communication compared to what's currently available."

Next, the scientists want to design a system that can be used by a person who can't speak, and therefore won't be able to train the virtual voice.

Chartier continued: "People who can't move their arms and legs have learned to control robotic limbs with their brains. We are hopeful that one day people with speech disabilities will be able to learn to speak again using this brain-controlled artificial vocal tract."

Alexander Leff, a professor of cognitive neurology at the UCL Queen Square Institute of Neurology who was not involved in the study, told Newsweek the two-step decoder was the novel part of the study.

"This works well for spoken speech, but is not perfect as one might hope," he said.

"This is still a long way off being used clinically," he stressed. "Patients who are likely to benefit are those with locked-in syndrome but normal (or near normal) cognition, so it's no good for the more common situation of minimally conscious state." Before that can happen, the system must get better at processing covert speech, the decoded speech must become more intelligible and recordings must be taken from more brain locations, Leff argued.

About the writer

Kashmira Gander is Deputy Science Editor at Newsweek. Her interests include health, gender, LGBTQIA+ issues, human rights, subcultures, music, and lifestyle.
