Facebook, Google and Twitter are fundamentally flawed and increasingly vulnerable to disinformation campaigns in the buildup to this year's midterm elections, according to a former White House tech advisor.

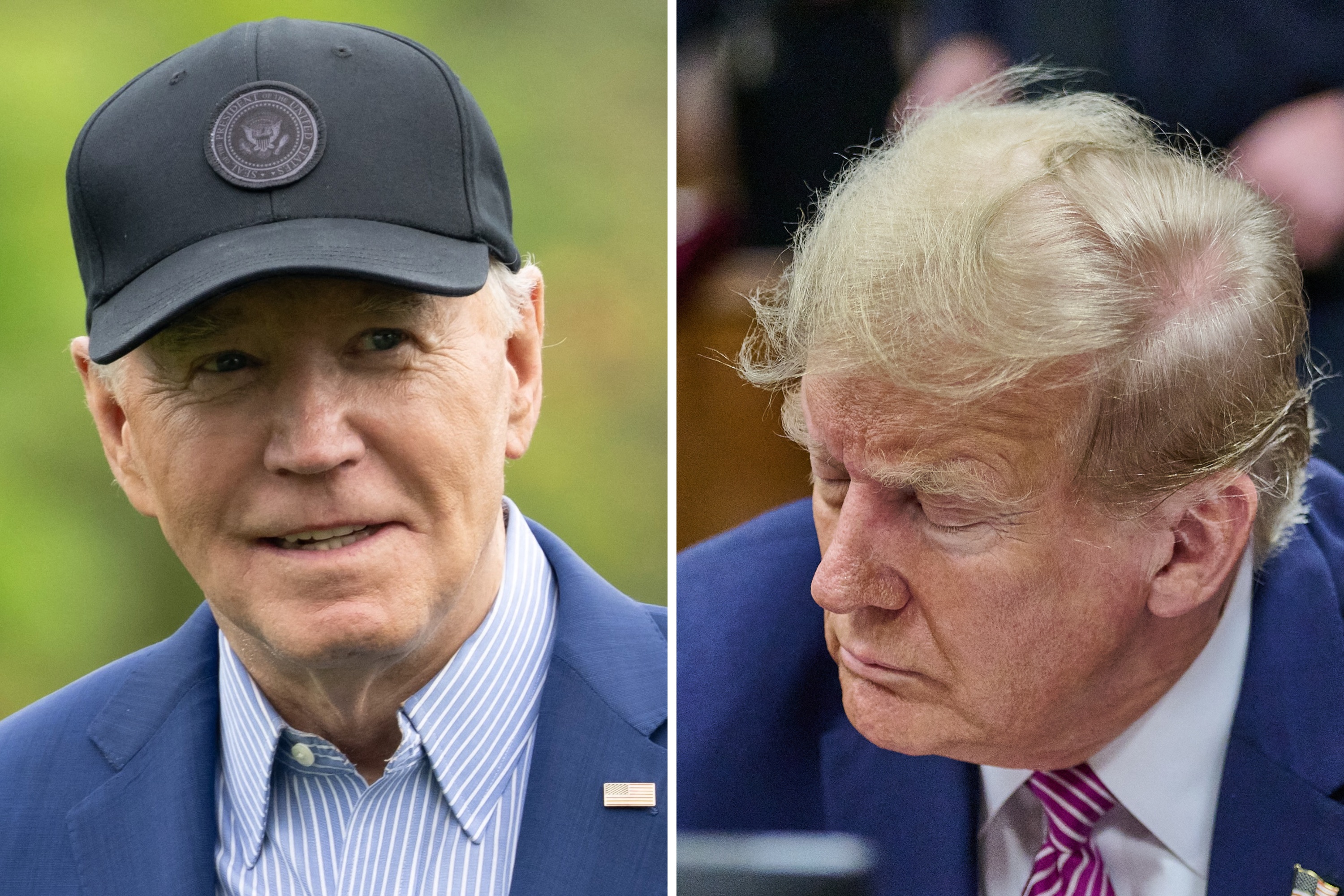

Fears of Russian interference in the upcoming elections come as a grand jury convened by special counsel Robert Mueller indicted more than a dozen Russians accused of manipulating these platforms to deepen political divides in the U.S. and spread disinformation in order to influence the 2016 election in favor of Donald Trump.

Dipayan Ghosh, who worked as an advisor to President Barack Obama as well as at Facebook during the 2016 election campaign, tells Newsweek that the issue is far broader than a single troll farm in St. Petersburg. The rise of new technologies like artificial intelligence means the spread of fake news and disinformation on social media will likely get worse before it gets better, and not just among Russian agents.

The debate about what is happening at the intersection of social media and democracy has so far been largely speculative, based on assumptions rather than data. Drawing on his experience in both government and industry, Ghosh wanted to inform the conversation with an evidence-based analysis of how the underlying system is structured and where it breaks down.

Together with co-author Ben Scott, Ghosh, who now works at the Washington think tank New America, published a report last month detailing how the current system makes disinformation campaigns indistinguishable from legitimate advertisers.

"The underlying lesson from our report is that this isn't about one particular bad actor that we can cut off, cauterize the wound and go back to business as usual," Ghosh says. "These are standard tools of the advertising industry that could be exploited by anyone."

As a result, platforms can filter out only content deemed illegal or in violation of their terms of service, while sophisticated machine-learning algorithms allow these propaganda posts to be precisely targeted at relatively low cost.

Court documents from the special counsel's investigation show that the Russian troll farm operated on a monthly budget of roughly $1.25 million, a modest sum for an operation that may have been able to sway the election.

"With a very small amount of money you can begin to pick and choose your demographics based on data collected," Scott says. "The highly sophisticated algorithmic modeling of audiences allows advertisers—including disinformation operators—to figure out which of the audience segments will be most responsive to campaigns."

While the issue has gained the most attention since the 2016 U.S. election, there is also evidence that Russian-backed groups attempted to sway the U.K.'s Brexit referendum earlier that year in an effort to undermine the political unity of the European Union.

Beyond Russia and Europe, a report from the Britain-based advocacy group Privacy International described the same tools in its analysis of last year's Kenyan elections. According to the report, Kenya's ruling party used social media platforms to identify and target different tribal groups in the country with incendiary messages and content designed to stoke political divisions.

"It's happening all the time and most of the actors aren't Russian spies or consultants for the Kenyan government, they're everyday political organizations whose communications do not contain illegal content or anything that violates the terms of service," Ghosh says. "But they are highly divisive and corrosive to the political culture because they are contributing to people's separation from facts."

This warning comes as U.S. intelligence chiefs unanimously stated their conviction that Russia will use the 2018 elections as an opportunity to "exacerbate social and political fissures in the United States." At a Senate Intelligence Committee hearing last week, Director of National Intelligence Dan Coats said Russia would use propaganda, fake news and false-flag personas across social media to divide the country and spread political instability.

The sentiments were echoed by Virginia Senator Mark Warner, who said that more than a year after Trump's election, protections were still not in place to prevent the same thing from happening again.

"We've had more than a year to get our act together and address the threat posed by Russia and implement a strategy to deter future attacks," Warner said. "But we still do not have a plan."

However, despite the threat from nefarious foreign actors and a perceived lack of protections on the online platforms that enable them, Republican Senator Jim Risch countered that the U.S. public is educated enough to spot disinformation campaigns. "I think the American people are ready for this," he said. "The American people are smart people."

Risch's confidence stands in direct contrast to where Facebook has pointed the finger of blame. Following Friday's indictments, Facebook's VP of Ads Rob Goldman once again highlighted the measures his company is taking to stem such operations in the future, but also blamed a lack of education among U.S. citizens.

> There are easy ways to fight this. Disinformation is ineffective against a well educated citizenry. Finland, Sweden and Holland have all taught digital literacy and critical thinking about misinformation to great effect. https://t.co/V0JNvW083W
>
> — Rob Goldman (@robjective) February 17, 2018

Facebook has said it will address the issue by focusing more on local news and trusted sources, while Twitter has emailed users who were targeted by disinformation campaigns. But these are only very small steps toward a solution, Ghosh says.

"It's not enough. I think that all of the companies have acknowledged that this is an ongoing issue that really needs to be addressed. There's going to be a need for a combination of regulatory reform and corporate policy pivots to address this issue more broadly."

Free speech concerns make it difficult for these platforms to simply ban disinformation campaigns, while their global reach means regulation in one country will not necessarily produce consistent rules around the world.

Ghosh and Scott now plan to extend their analysis in order to develop more specific recommendations for regulatory reforms that could prove effective in the coming years.

"We don't have specific answers to these questions yet and we think that it requires significant further research to fully understand," Scott says.

"It will almost certainly get worse. It's a complicated system and there's not one magic silver bullet that's going to solve everything."

Uncommon Knowledge

Newsweek is committed to challenging conventional wisdom and finding connections in the search for common ground.

About the writer

Anthony Cuthbertson is a staff writer at Newsweek, based in London.

Anthony's awards include Digital Writer of the Year (Online ...