Given recent developments in artificial intelligence (AI), we now appear to be on the cusp of economic and societal upheaval akin to, or perhaps even eclipsing, that brought about by the Industrial Revolution. Over the coming decades, every aspect of our lives will be affected, from health care and education to transportation and communication. It is therefore essential that we enter this new era understanding how strongly today's decisions will shape tomorrow's world. Far from being an impartial equalizer, AI could well exacerbate and solidify existing inequalities if we aren't careful. One greatly underappreciated concern in this regard is the presence and impact of developer bias.

Developer bias refers to the unconscious or even deliberate influence that creators have on the AI systems they design. That influence in turn shapes the systems' outputs, because the systems operate on the very biases their creators brought to the work. Simply put, when the design of a system is biased, its results will be too.

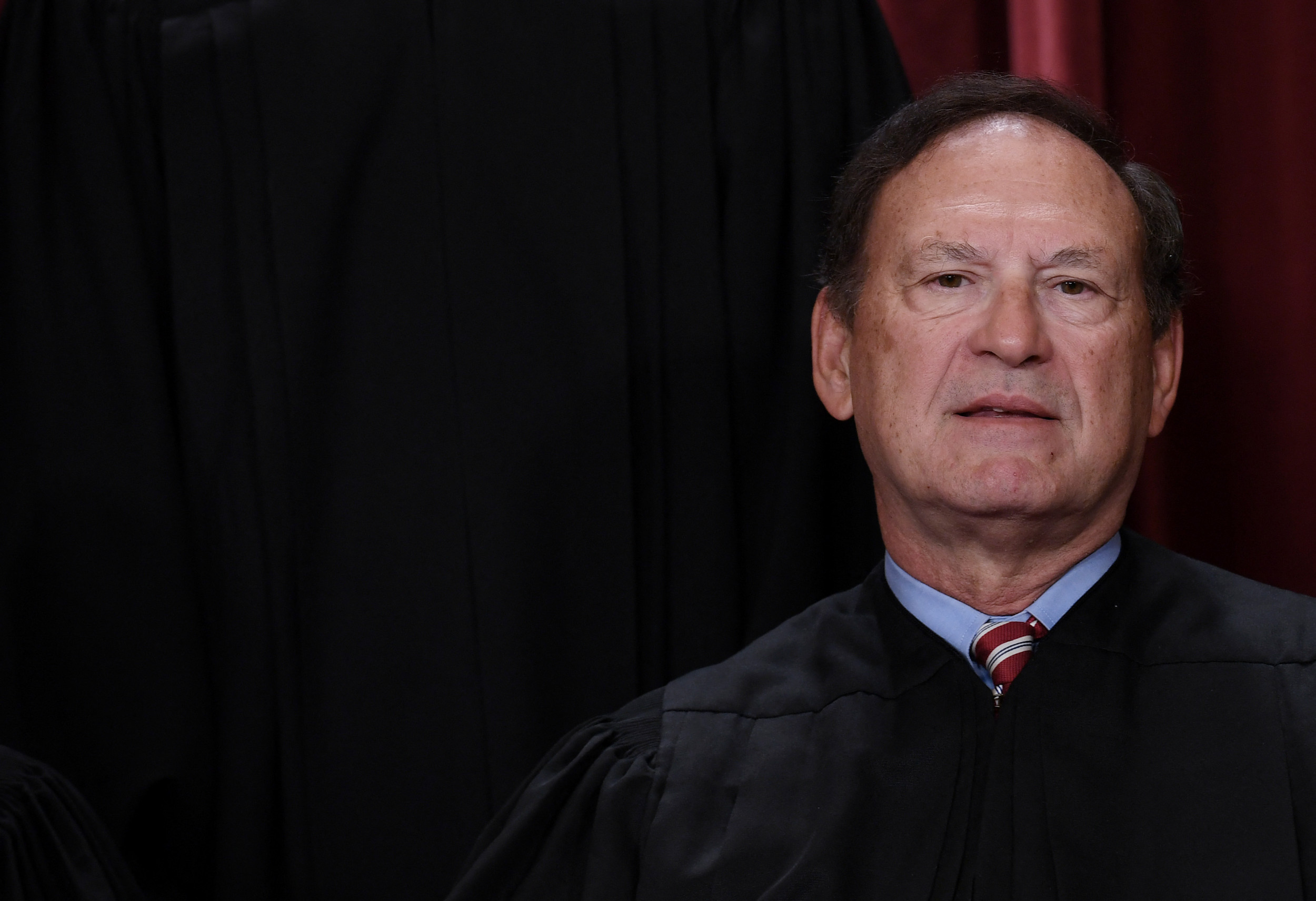

This bias is particularly prevalent in religion and politics, where moral and ideological values are an inescapable part of the equation. Take, for example, the liberal bias of the AI chatbot ChatGPT, which writes poems admiring President Joe Biden on command while refusing to do so for former President Donald Trump. Should large language models writ large carry this same sort of bias and be employed to write the bulk of our news stories, the media landscape would be inexorably tilted in one ideological direction. Conservatives could later build and deploy their own intentionally conservative large language models. Even so, the inertia of an initial wave of liberal bots might well leave would-be competitors about as successful as the companies that have tried to displace Google in search or Amazon in online retail.

Beyond simple political tilt, developer bias has the potential to systematically undermine fairness and impartiality across society. This is especially true in law enforcement and the criminal justice system. There, AI is already being used to make decisions with life-altering consequences, and those decisions have reliably produced racist results. Results such as these will, deservedly, continue to erode public confidence in AI technology and fuel a backlash against its use and development.

AI's own potential for creativity and progress is also at stake. When AI algorithms are shaped by the biases of a few individuals, they are inherently unrepresentative of the diverse perspectives and experiences of society. The result can be AI that is narrow-minded and lacking in imagination, which greatly limits its potential. The wisdom of the crowd, not the genius of a small handful of developers in Silicon Valley, is what will maximize AI's positive impact on the world.

Luckily, developer bias can be overcome. The first step is for the architects of AI to recognize their own biases and take active steps to mitigate them through regular training, education, and self-reflection. Intentional neutrality ought to be the ideal we strive for when creating AI. That ideal will never be attainable if we allow our own personal biases to cloud the code.

However, even if AI developers themselves are totally unbiased, they will continue to produce biased systems unless they identify and correct the ways in which the data their algorithms learn from are themselves a product of bias. Algorithms should therefore be trained on deliberately diverse and inclusive data sets whenever possible. Developers must also plan ahead for how AI can correct for biased data, just as a human can be taught to identify and correct for misleading information.

Lastly, AI companies must put a strong ethical framework in place. Given how impactful we expect AI to be, these companies should strive to ensure the technology benefits all members of society. Just as an ounce of prevention is worth a pound of cure, strong ethical guidance from the start will minimize the need for costlier corrections in the future.

Nicholas Creel is an assistant professor of business law at Georgia College and State University.

Gavin Incrocci is an undergraduate student at Georgia College and State University who is studying cryptocurrency and cyber-law under Professor Creel.

The views expressed in this article are the writers' own.

Uncommon Knowledge

Newsweek is committed to challenging conventional wisdom and finding connections in the search for common ground.