The current AI frenzy is focused on generative AI technologies like ChatGPT, DALL-E, and Gemini that create original writing, images, computer code, and even music. In medicine, people are throwing GPT at every problem under the sun, testing it as a scribe that can help doctors complete medical records, a chatbot that can offer public health advice, and a tool to diagnose patients and recommend treatment.

While many of these applications are promising, GPT is not the catch-all health care solution people want it to be. As a whole, AI has enormous potential to transform medicine. But we can do so much more with it if we use the right AI tools for each task and carefully combine them with existing scientific knowledge.

What's Missing from Our Current Conversation

In my lab at Boston University, we use AI to map the brain and better understand disorders like autism and epilepsy. There are two big things I believe we're missing in our rush to apply generative AI in health care:

1) We need the right kinds of data in the right amounts.

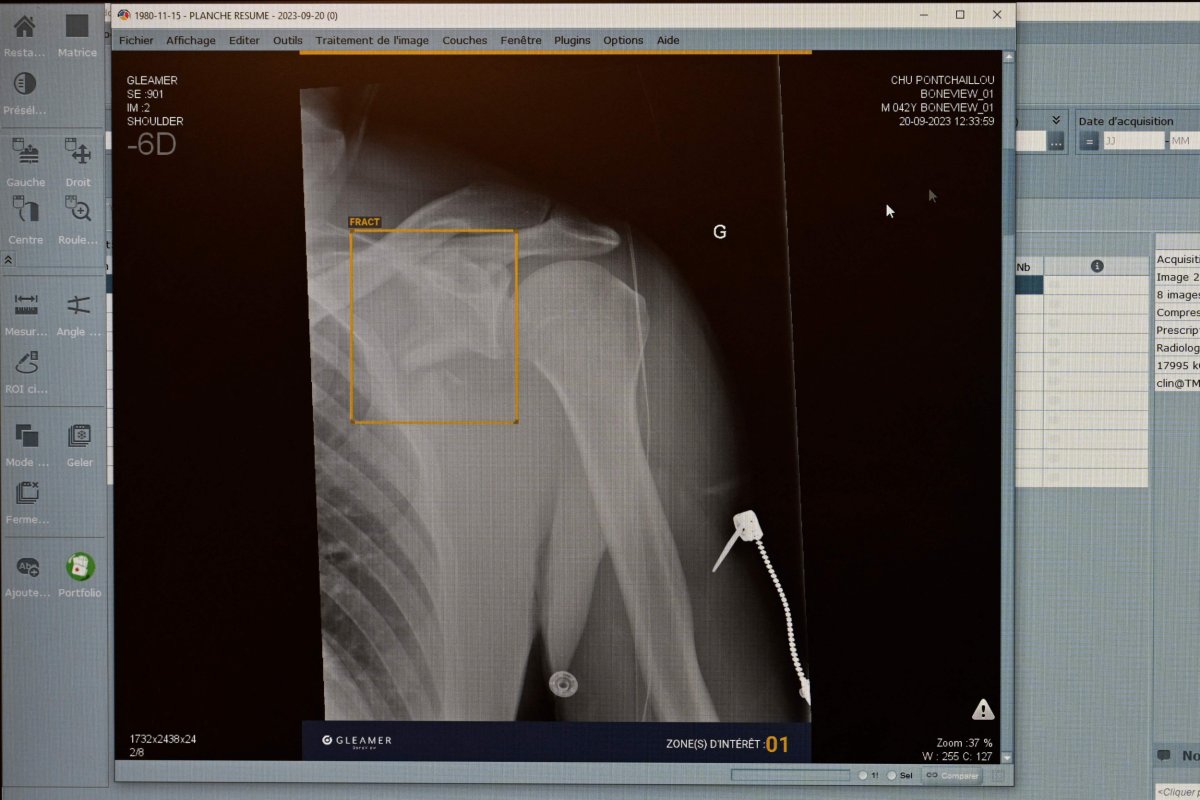

Not every tool is right for every task. GPT and other large language models (LLMs) are text-based. But a lot of medicine relies on spatial or visual data like imaging scans, genetic data, and photographs that can't be easily boiled down to text. The AI I use in my research, for example, is more akin to computer vision models than LLMs.

A second issue is the amount of data we have. Models like GPT and DALL-E are trained on massive caches of text and images pulled from the entire internet. But in many branches of medicine, data is far more expensive and time-consuming to collect. Running a single MRI costs hundreds of dollars and can take an hour to complete. That is before counting the cost of bringing the patient to an MRI center, the staff needed to run the scan, and the infrastructure needed to support the equipment.

We don't have an internet's worth of images of what different people's brains look like to train a model like GPT. Aggregating data across hospitals has its own problems: it can, for example, introduce biases that actually worsen performance. Instead, we rely on streamlined AI architectures and use existing biological and clinical knowledge to shape our models.

2) We need to combine data with existing scientific knowledge.

In my lab, we developed an algorithm that can detect epileptic seizures and pinpoint where they are happening in the brain. The algorithm uses an AI technique called deep learning to identify patterns in electroencephalography (EEG) data, which record electrical activity in the brain. In testing, it beat existing state-of-the-art seizure detectors that don't use deep learning.

The key difference between our work and existing tools is that we treat the seizure as an evolving process, bringing in what we know about how seizure activity spreads in the brain, and use AI architectures that capture this propagation. This approach allows us to make robust predictions from relatively small datasets.

Imagine trying to learn a language by plopping yourself down in a foreign country—this is a rough approximation of how generative AI is trained. Building existing science into our AI models is akin to having someone explain the alphabet and grammar rules to you first, then setting you loose to soak up the language.

The Real Promise of AI in Medicine

The brain is strikingly complex, with nearly 100 billion neurons that interface across trillions of neural connections. A single scan of someone's brain or sequencing of their genome contains a huge amount of data. AI supercharges our ability to wade through this data and recognize patterns.

One of our projects uses biologically inspired AI architectures to analyze fMRI brain imaging and genetic data and identify connections that might underlie heritable neurological disorders like schizophrenia. In the past, scientists would focus on a few pre-identified brain areas and genetic markers that they believed corresponded to a particular disorder. AI allows us to analyze someone's entire brain and genome at once to discover patterns we didn't know were there.

AI will revolutionize medicine in incredible ways. While generative AI will certainly have an impact, if we focus too much on it, we will miss out on all the other ways AI can help us understand the human brain and body.

Doctors, scientists, and funders shouldn't be taken in by the hype or distracted by flashy applications with limited clinical value. We should keep our focus on using the right AI tools for the job and building on existing clinical knowledge to create truly transformative science.

Dr. Archana Venkataraman is an associate professor in Boston University's College of Engineering, where she uses AI to study the brain and develop new approaches to treating brain disorders like autism, epilepsy, and schizophrenia.

The views expressed in this article are the writer's own.

Uncommon Knowledge

Newsweek is committed to challenging conventional wisdom and finding connections in the search for common ground.
