Marcel Just, a psychologist at Carnegie Mellon University, tries to take pictures of human thoughts. He was giving a talk about the way concepts are physically represented in the brain, when his colleague, David Brent, a psychiatrist, asked him: Did he think he could detect changes in the thoughts of people who are suicidal?

Just decided it was worth a shot. The two teamed up, and the answer, they concluded, was yes. In a paper published today in *Nature Human Behaviour*, their team investigated whether concepts like "death" and "life" were represented differently in the brains of people who had suicidal thoughts than in the brains of those who did not.

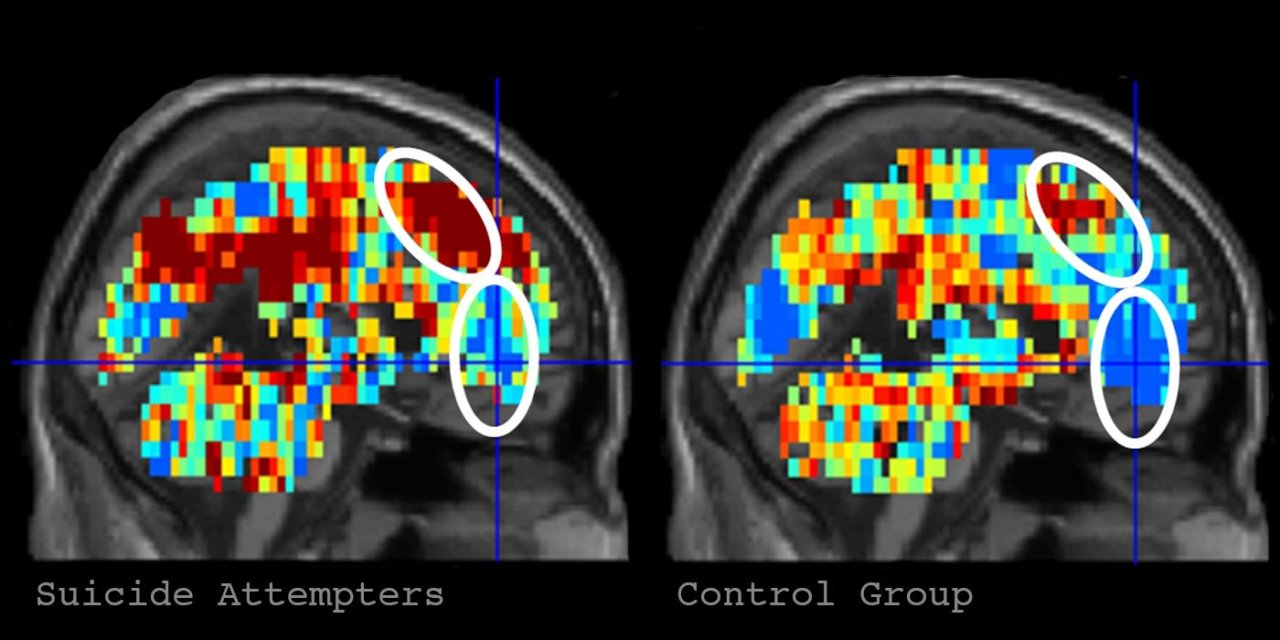

Just's group looked at 34 people: 17 who'd had suicidal thoughts and 17 who hadn't. The researchers monitored each participant's brain by functional magnetic resonance imaging (fMRI) as they were shown a series of words that represented different concepts. Some ("carefree," "comfort," "bliss") were positive; others ("apathy," "death," "desperate") were related to suicide; and others still ("boredom," "criticism," "cruelty") were negative and unrelated to suicide.

When shown the suicide-related concepts, the brain "signatures" of the people who had thought about suicide looked significantly different from those of the other participants. Of those concepts, "death" was the most discriminating.

The researchers then trained a machine-learning algorithm on this data. They "taught" the algorithm by showing it the fMRI data, along with each person's classification (whether or not they had thought about suicide), for every participant except one. Once the algorithm was trained, the researchers gave it the fMRI data of the held-out participant without revealing whether that person had suicidal thoughts, and the algorithm used what it had learned to decide. Repeated across the participants, this approach classified them with 91 percent accuracy.
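The train-on-everyone-but-one procedure described above is commonly called leave-one-out cross-validation. The Python sketch below illustrates the idea only. The nearest-centroid classifier, the function names, and the two-number "brain signatures" are hypothetical stand-ins, not the study's actual classifier or fMRI features.

```python
from statistics import mean


def nearest_centroid_predict(train_x, train_y, x):
    """Hypothetical stand-in classifier: pick the class whose average
    feature vector (centroid) is closest to x."""
    centroids = {}
    for label in sorted(set(train_y)):  # sorted for deterministic tie-breaking
        rows = [row for row, y in zip(train_x, train_y) if y == label]
        centroids[label] = [mean(col) for col in zip(*rows)]

    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(x, centroid))

    return min(centroids, key=lambda label: sq_dist(centroids[label]))


def leave_one_out_accuracy(xs, ys, predict=nearest_centroid_predict):
    """Hold out each participant in turn, train on the rest, and score
    how often the held-out participant is classified correctly."""
    correct = 0
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]  # everyone except participant i
        train_y = ys[:i] + ys[i + 1:]
        # The held-out label ys[i] is only used to check the prediction.
        if predict(train_x, train_y, xs[i]) == ys[i]:
            correct += 1
    return correct / len(xs)


# Made-up toy data: label 1 = reported suicidal thoughts, 0 = control.
xs = [[0.9, 0.1], [1.1, 0.0], [1.0, 0.2], [0.1, 1.0], [0.0, 0.9], [0.2, 1.1]]
ys = [1, 1, 1, 0, 0, 0]
print(leave_one_out_accuracy(xs, ys))
```

Because every participant is held out exactly once, each person is classified by a model that never saw their own label, which matters when a sample is as small as 34 people.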

It's important to note that this study doesn't show a way of predicting who will and won't die by suicide. But the research, Just says, is heading in that direction.

"It opens that possibility," said Glenn Saxe, a psychiatrist at NYU School of Medicine who was not involved with the study, noting that such a predictor "could really powerfully add to the tool kits psychiatrists have." But Saxe also urged restraint: "You don't want to go beyond what this is saying."

And for a question as high-stakes as suicide, accuracy matters enormously. Paul Sajda, a researcher in neuroimaging and computing at Columbia University, emphasized that point of caution to Newsweek: although the algorithm is 91 percent accurate, for something like suicide, any error is still a big deal.

As Sajda put it: "What's the cost of missing two individuals that are in fact strong in suicidal ideation, and what's the cost of labeling one of the controls as suicidal?"
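The arithmetic behind Sajda's question can be sketched in a few lines of Python. This assumes his figures describe the errors among the 34 participants: two of the 17 people with suicidal thoughts missed, and one of the 17 controls wrongly flagged. The function name and the sensitivity/specificity breakdown are illustrative additions, not figures quoted in the article.

```python
def summarize(tp, fn, tn, fp):
    """Summarize a two-class result from its confusion-matrix counts."""
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,
        # Share of people with suicidal thoughts who were correctly flagged.
        "sensitivity": tp / (tp + fn),
        # Share of controls who were correctly left unflagged.
        "specificity": tn / (tn + fp),
    }


# Assumed counts: 17 ideators with 2 missed; 17 controls with 1 mislabeled.
stats = summarize(tp=15, fn=2, tn=16, fp=1)
for name, value in stats.items():
    print(f"{name}: {value:.1%}")
```

Under these assumptions, 31 of 34 correct calls works out to about 91.2 percent accuracy, while the model catches only about 88.2 percent of the people with suicidal thoughts. A single headline accuracy figure can therefore hide the two kinds of error Sajda distinguishes.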

Sajda also pointed out that the other measures the researchers used to gauge participants' emotional state were accurate on their own, so it wasn't clear what value a test like this would add.

Just and his team are attuned to the risks and the need to address them going forward. He told Newsweek that many steps would have to be completed before this information could be used in assessing patients. For one, he'd like to replicate his findings with a larger number of people: 34 is a small number for an analysis like this, though willing participants are hard to come by. Also, keeping someone in an fMRI scanner for 30 minutes is neither practical nor cheap.

He also stressed that anything that comes out of this research would strictly be an add-on to assessments by a therapist or psychologist. "That's the gold standard against which we compare it."

As for any fears of a *Minority Report* situation gone awry, Just insists that given how much work this takes, "there's no way this can be done against a person's will." At least for now, "You can't point a little laser beam at them and find out what they're thinking."