There is an inconvenient truth in technology: The amount of data keeps growing exponentially, while the increases in the power of computers are slowing down.

Somebody needs to invent a new way for computers to work—different from the programmable electronic machines that have dominated since the 1950s. Otherwise, we'll either get overrun by data we can't use or we'll end up building data centers the size of Rhode Island that suck up so much electricity they'll need their own nuclear power plants.

And, seriously, does the world want Google to have nuclear capabilities?

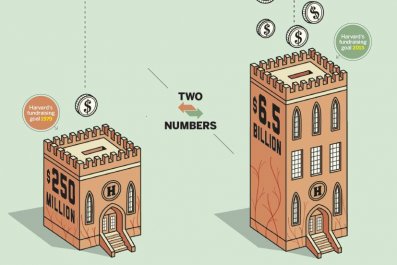

The widening gap between data and computing explains why IBM said in July that it will invest $3 billion in research into new types of computer processors. It also helps explain why D-Wave, a tiny Canadian company working on quantum computer technology that at first glance seems as plausible as growing bacon on trees, announced $28 million in funding from Goldman Sachs, Silicon Valley venture firm Draper Fisher Jurvetson and the Business Development Bank of Canada.

Sooner or later, IBM or D-Wave or some other upstart is going to invent computing's version of a jet, and all the other computers everywhere in the world will suddenly seem like trains.

The explosion of data is already old news. Put 3 billion people on the Internet, mix with a boom in mobile apps and sensors going into everything, and stir. You get a concoction that generates so much data that the nomenclature for the amounts sounds as if it were borrowed from Dr. Seuss. Today's zettabytes are becoming tomorrow's yottabytes, and the day after tomorrow we'll have, like, blumbloopabytes.

This would be OK if computers could keep up and process the growing flood of data in a timely manner. But they can't. One of the problems lies in the chips that do all the processing. For 50 years, technologists have relentlessly made chips smaller, denser and faster. Moore's Law, which works more like a suggestion than an incontrovertible law of nature, observed that the number of transistors on a chip doubles roughly every two years, which in practice meant chip performance doubled every 18 months or so. As long as that held true, computer hardware ran ahead of anything we wanted computers to do.

But we've shrunk the transistors on chips nearly to their physical limits, with features just a few dozen atoms wide. If chips can't get smaller and denser, computers can't get faster and more powerful at the rates we're used to. And at this point, if the chips get much smaller and denser, they'll disappear.

The other problem is the fundamental architecture of just about all of today's computers, an architecture first described in 1945 by mathematician John von Neumann. In the von Neumann architecture, the processor is separate from the memory that holds both the software programs and the data. The processor pulls instructions and data out of memory and carries out the instructions one step at a time, like someone working through a math problem line by line.

Computers today can carry out those instructions in nanoseconds, but they still do it one step at a time, and every instruction and every piece of data has to squeeze through the narrow channel between processor and memory. That constraint has become a liability known as the von Neumann bottleneck. If individual chips can no longer be made faster while the amount of work computers have to do keeps growing, the only way to attack ever larger data problems with von Neumann computers will be to build more of them, in ever bigger data centers.

The serial architecture of computers is nothing like, and much less efficient than, the architecture of brains. A brain stores information and processes it in the same place, and it reprograms itself all the time (that is, it learns). It processes massive amounts of stuff simultaneously instead of serially, all on a trickle of electric power, roughly 20 watts. Even von Neumann recognized the contrast between his architecture and the brain's. He wrote about it just before he died and suggested that future computers would have to work more like brains.

A good deal of IBM's $3 billion investment is going toward developing brain-inspired chips and architecture. The company has been funding research in that area for a few years, contributing to a grand project called SyNAPSE (Systems of Neuromorphic Adaptive Plastic Scalable Electronics), which is funded by DARPA, the U.S. military's research agency. Several other companies and universities are also contributing to SyNAPSE.

The goal set by SyNAPSE is a computer chip that can act like a brain with 100 million neurons. Such a chip would fall somewhere between a rat's brain, with its 55 million neurons, and a cat's brain, with its 760 million neurons. And that's still a far cry from human brains, which boast roughly 86 billion neurons, minus losses from tequila blackouts in Puerto Vallarta. Though maybe that's just my excuse.

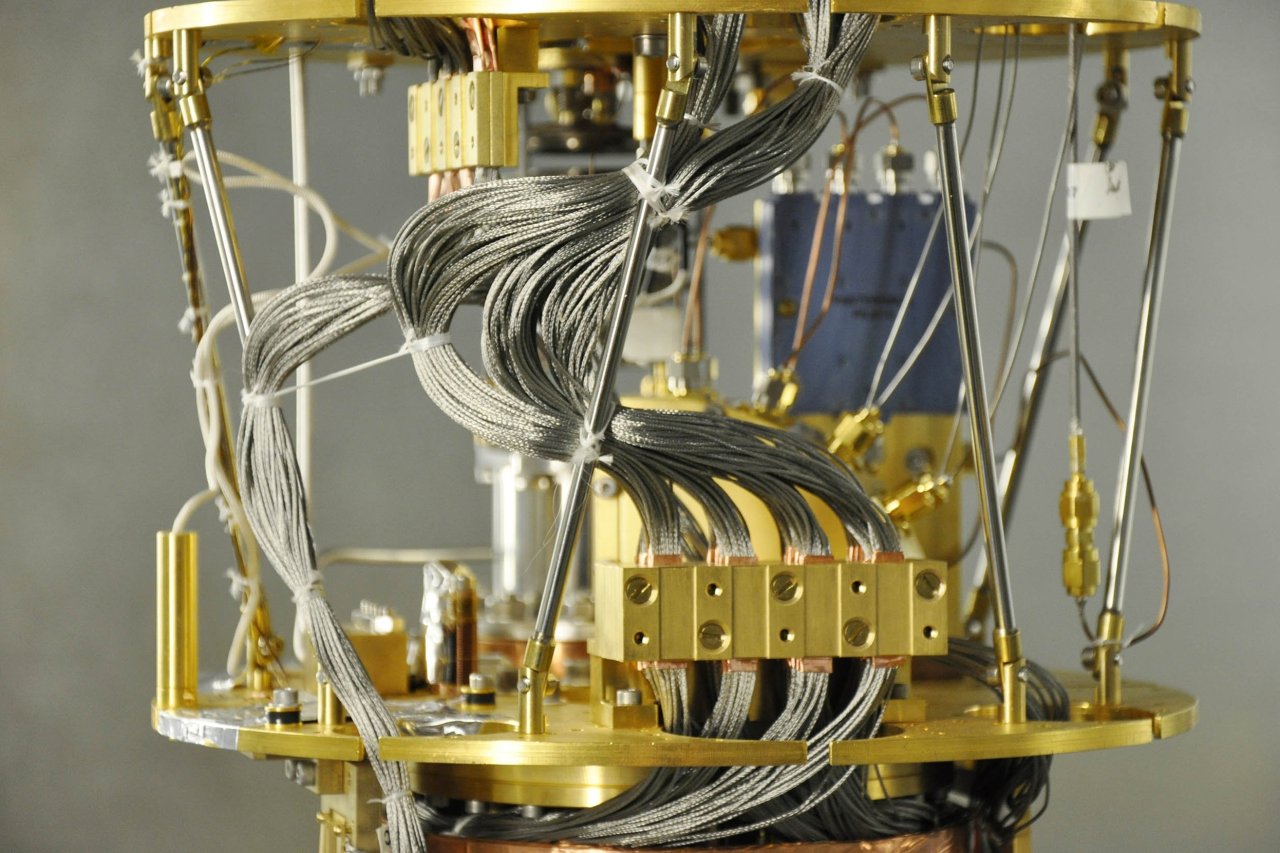

D-Wave is pursuing a different solution to the von Neumann bottleneck. Labs all over the world are working on quantum computing, but D-Wave is one of the few companies already trying to commercialize it. The basic idea is to use the weirdness of quantum physics, in which a quantum bit, or qubit, can be both 0 and 1 at the same time, to make calculations. (D-Wave builds its qubits from superconducting circuits rather than individual atoms.) A few hundred working qubits could, in principle, crack certain problems that are beyond the reach of the world's biggest supercomputer.

All this is promising, but there's a basic problem with quantum and brain-inspired computers: They're still lab projects. D-Wave's prototype quantum computer may not be all that quantum, the way Batman might not be a superhero since he doesn't really have superpowers. The SyNAPSE project is still years from getting to a rat's brain. Mathematicians don't yet know how to write algorithms that take advantage of the all-at-the-same-time processing we're promised in cognitive and quantum computers. IBM Research chief John Kelly often says that, based on today's scientific work, true cognitive and quantum computers will arrive sooner than expected, but even that means a decade or more from now.

In the meantime, if the trend lines for data and computing power continue in their current directions, we could end up either choking on data or getting buried under data centers. China is already building a data center triple the size of any in the U.S. It will be as big as the Pentagon.

One of these suckers the size of Rhode Island can't be far behind.